Start with camera-based tracking using inexpensive webcams; train a lightweight AI model that translates frames into usable poses, then retarget to a rig in Blender, delivering animation quickly without wearables.

In practice, you can reach 60 fps processing on 1080p streams, latency of 90–120 ms or less, and a median pose error under 5 cm with a pipeline that stays in-house. This information underpins hands-on experience, blog posts, and a showcase that demonstrates platform-scale capabilities; the business case grows revenue through faster iteration, reduced hardware costs, and new service offerings to gaming and non-gaming clients alike.

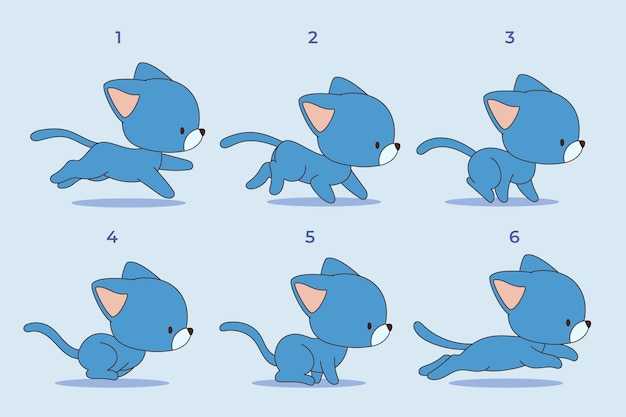

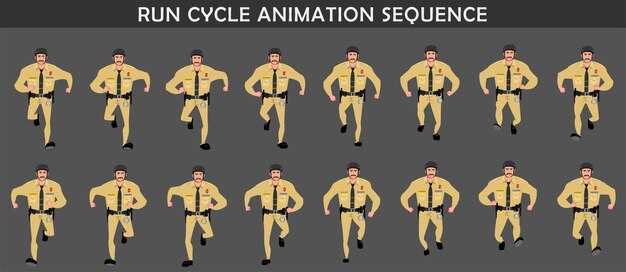

Recommended workflow: estimate poses frame by frame, apply dynamics, blend to a universal rig, bake the moves, export to a target platform, then push assets into Blender. Maintain an audit trail throughout so the team can see what was changed and why.

Adopt a modular integration so some in-house studios can evolve a shared pipeline: Python-based tooling to ingest frames, a compact trainer, and a runtime that outputs animation curves to target platform engines such as Unity, Unreal, or Blender. This way, teams have a consistent framework, build information-rich experiences, and publish case studies on a blog to showcase value.

Early in-house pilots show a 2–3x reduction in iteration time, 20–40% hardware savings, and a faster ramp of creative output. The system tracks moves and dynamics with high fidelity, whilst experiences on the blog attract partners who value intuitive tools that feel like gaming. A crisp showcase demonstrates how a studio, with in-house talent, can scale, keep costs predictable, and deliver incredibly efficient animation workflows.

No-Suit AI Motion Capture: Practical Set-Up and Workflow

Install a compact, on-device pipeline with a depth camera paired to a modern GPU laptop to achieve sub-20 ms latency and 60–120 Hz outputs. This setup yields smooth, interactive movement data directly into your digital workflow. Use a single well-aligned camera view to reduce occlusion, and calibrate once per session.

Choose devices that deliver high-quality depth, such as Azure Kinect DK or Intel RealSense, plus an optional inertial module for tricky arms. Pair with a laptop or workstation with at least 16 GB RAM and a discrete GPU (RTX 3060 or better) to keep inference stable under streaming load. If you scale to multiple characters, use a second HDMI port or USB-C dock to maintain a seamless data path, which lets you use multiple views to improve image fidelity. A physical reference pose improves scale accuracy, and this setup provides useful data to refine later.

Hardware is useless without a robust software stack. A lightweight AI model trained on studio data can lift cues from image streams into 3D joint positions, enabling fully digital performances. Developers can tune the network with a training set of a few thousand frames and expand using synthetic data to cover clothing, lighting, and landscapes. In practice, this gives a high level of interactive feedback for artists.

Calibrate using a standing neutral pose and a quick scaling reference. Direct streaming from the camera to the inference stage minimises latency, with a post-filter that reduces jitter. Outputs export to your engine via a simple JSON structure that retargets to your character rig, providing image-based pose data that can be baked into animation across every asset.
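The jitter post-filter and JSON export described above can be sketched in a few lines. This is a minimal illustration, not the pipeline's actual format: the exponential moving average is one simple choice of filter, and the JSON field names (`fps`, `frames`, `index`, `joints`) are assumptions made for the example.

```python
import json


def smooth_frames(frames, alpha=0.5):
    """Exponential moving average over per-joint (x, y, z) positions.

    frames: list of dicts mapping joint name -> (x, y, z) in metres.
    alpha: weight of the newest sample; lower values smooth more.
    """
    smoothed = []
    previous = None
    for frame in frames:
        current = {}
        for name, pos in frame.items():
            # The first frame (or a newly seen joint) passes through unchanged.
            old = pos if previous is None else previous.get(name, pos)
            current[name] = tuple(
                alpha * new + (1.0 - alpha) * prev for new, prev in zip(pos, old)
            )
        smoothed.append(current)
        previous = current
    return smoothed


def frames_to_json(frames, fps=60):
    """Serialise frames to a JSON string using a hypothetical schema."""
    payload = {
        "fps": fps,
        "frames": [
            {"index": i, "joints": {name: list(pos) for name, pos in frame.items()}}
            for i, frame in enumerate(frames)
        ],
    }
    return json.dumps(payload)
```

A lower `alpha` removes more jitter but adds lag, so it is worth tuning against the latency budget for live previews.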

This workflow supports interactive previews inside the editor, enabling you to tweak parameters live. Use a digital twin preview to adjust timing, apply smoothing, and preserve movement integrity. Streaming to the game engine should be configured for 1080p or 4K previews depending on hardware; 1080p at 60 Hz is common for real-time iteration, which helps game teams iterate faster.

To ensure safety and consistency, place cameras in stable mounts, avoid occlusion zones, and set a safe desk height; implement a light background and uniform lighting to reduce false positives. Use a multi-view setup where possible to improve accuracy, which increases effectiveness in dynamic scenes like gaming demos and live-streamed events.

In practice, keep a minimal calibration routine after changing spaces. Provide a local streaming path to the engine, reducing dependency on the cloud. Use colour-coded feedback to indicate tracking confidence, and log frames for later analysis in training datasets to improve models. This approach delivers flexibility and value across diverse teams, keeping the system useful across gaming scenarios, landscapes, and image streams.
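Colour-coded confidence feedback can be as simple as a threshold lookup. The sketch below assumes confidence is reported in the range 0 to 1; the colour names and the `warn` and `good` cut-offs are illustrative values, not figures from any particular tracker.

```python
def confidence_colour(confidence, warn=0.5, good=0.8):
    """Map a tracking confidence in [0, 1] to a traffic-light colour."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= good:
        return "green"
    if confidence >= warn:
        return "amber"
    return "red"
```

Frames that come back "red" are natural candidates for the log that later feeds the training dataset.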

What makes no-suit mocap feasible today?

Start with a markerless tracking stack that fuses multi-view colour cameras, depth sensors, and lightweight inertial units mounted on key body segments. Processing pipelines integrate these streams to produce robust 3D poses in real time, with latency typically 20–40 ms or less on modern CPUs/GPUs. This combination relies solely on sensors rather than a full-body garment.

Behind this, the effectiveness comes from physics-based filtering, where kinematic constraints and gravity priors tighten estimates. Integrate machine learning priors with geometric optimisation to maintain accuracy when occlusions occur, particularly when limbs cross or are partly hidden by the subject’s body. Credit goes to researchers behind these markerless approaches.
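One of the simplest kinematic constraints is a fixed bone length: after each estimate, the child joint is moved along the bone direction so the segment keeps the length measured during calibration. The sketch below shows only that single constraint, under the assumption that joints are 3D points in metres; a real solver would combine it with joint limits and the gravity priors mentioned above.

```python
import math


def enforce_bone_length(parent, child, target_length):
    """Move child along the parent->child direction so the bone has target_length."""
    direction = [c - p for p, c in zip(parent, child)]
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0.0:
        # Direction is undefined when both joints coincide; leave the child as-is.
        return tuple(child)
    scale = target_length / length
    return tuple(p + d * scale for p, d in zip(parent, direction))
```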

To cover a broad set of tasks, record diverse poses such as athletic moves, yoga postures, and everyday actions; build a pose library and use it to initialise tracking. In projects across studios, games, health apps, and simulation pipelines, you can reuse this data to accelerate calibration.

Integrated hardware, plus add-ons such as extra infrared beacons or body-worn IMUs, can improve robustness; adding these is optional and enhances stability, ensuring compatibility through modular interfaces. Add-ons provide standardised data streams.

Health-first design guides practice: lightweight housings, even weight distribution and breaks after short blocks to maintain comfort. Simplicity in the setup supports faster onboarding and fewer errors, while quiet calibration steps keep operators focused.

Practical steps: deploy 3–4 cameras around the subject at 0.8–3 m distance; calibrate with a neutral pose; run 40–60 fps streams; apply physics-based smoothing; validate outputs on 5–10 projects to verify effectiveness.
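As a starting point for the layout, the camera positions can be computed rather than eyeballed. This sketch assumes the cameras are spaced evenly on a circle around the subject, who stands at the origin; the even spacing, the shared mounting height, and the function name are assumptions for illustration.

```python
import math


def camera_ring(count, radius_m, height_m):
    """Place `count` cameras evenly on a circle, each yawed towards the origin."""
    if count < 1:
        raise ValueError("count must be at least 1")
    cameras = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        x = radius_m * math.cos(angle)
        y = radius_m * math.sin(angle)
        # Yaw (degrees) of the direction from the camera back to the subject.
        yaw_deg = math.degrees(math.atan2(-y, -x))
        cameras.append({"position": (x, y, height_m), "yaw_deg": yaw_deg})
    return cameras
```

For example, `camera_ring(3, 2.0, 1.0)` gives three cameras 2 m from the subject at 1 m height, 120° apart.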

Hardware and software you actually need (no suit)

Two to three RGB-D cameras arranged around the subject provide reliable body data for an avatar, without wearing suits. This setup directly yields capture-ready movement data you can import into Blender and other open platforms.

Lighting: three-point setup with diffused key, fill, and back lights. Target 5500–6000K colour temperature and CRI above 90; keep about 500–700 lux on the subject, and avoid flicker from other light sources. This lighting improves spatial fidelity of the data.

Software workflow: Blender, an open platform, supports previs; you can retarget captured data to existing rigs; a lightweight script maps joint angles into the avatar rig.
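The mapping step can be sketched independently of Blender. The example below assumes the tracker reports Euler angles in degrees per joint; the joint names and the bone names in `JOINT_TO_BONE` are hypothetical and need to match your own tracker output and rig.

```python
import math

# Hypothetical mapping from tracker joint names to rig bone names.
JOINT_TO_BONE = {
    "left_elbow": "forearm.L",
    "right_elbow": "forearm.R",
    "left_knee": "shin.L",
    "right_knee": "shin.R",
}


def map_angles_to_rig(joint_angles_deg, mapping=JOINT_TO_BONE):
    """Convert tracker angles (degrees) to per-bone rotations (radians).

    Returns (bone_rotations, unmapped), where unmapped lists joints the
    rig has no bone for, so they can be reviewed rather than silently lost.
    """
    bone_rotations = {}
    unmapped = []
    for joint, angles in joint_angles_deg.items():
        bone = mapping.get(joint)
        if bone is None:
            unmapped.append(joint)
            continue
        bone_rotations[bone] = tuple(math.radians(a) for a in angles)
    return bone_rotations, unmapped
```

Inside Blender, the resulting values would typically be assigned to the matching pose bones and keyframed; that part depends on the rig's rotation mode and is left out here.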

Testing and validation: run yoga pose sequences to validate joint limits; evaluate in existing scenes; adjust scale, spacing, and timing for natural motion. Deep calibration steps refine alignment across cameras.

Hardware choices: select cameras from reliable brand families, such as Azure Kinect, Intel RealSense, or quality USB webcams from brands with strong body-tracking support. Ensure devices support 60–120 Hz capture and have dependable drivers.

Costs and revenue: a budget kit spans a few hundred to a few thousand pounds depending on scope; open-source tooling reduces upfront cost; this path supports rapid previs in client projects, delivering creative output and revenue.

Camera placement and lighting for clean capture

Place the camera 1.0–1.2 m away, aligned with the torso midline, with the lens at 0.95–1.05 m height and a 15–20° downward tilt. Stabilise on a fixed tripod to prevent drift. In a three-camera setup, form a triangle around the subject with 0.6–0.9 m spacing between lenses and point each toward the chest centre to maximise captured coverage. This baseline yields clean silhouettes in most rooms and remains robust across lighting shifts.

Lighting plan: implement a three-point system. Key light placed at 60–75° to the subject, delivering 1000–1400 lx on the face, colour temperature 5400–5600 K. Use diffusion to soften shadows, with 1–2 stops of attenuation. Fill light at 30–45° opposite side, 300–500 lx, same colour temperature. Backlight at 60–90° behind, 150–250 lx to separate figure from backdrop. Use a neutral background with CRI 95+ from flicker-free LEDs; avoid direct sun by masking windows when needed. This approach yields consistent, high-contrast posture lines suitable to support downstream processing. This setup provides stable, repeatable results across sessions and supports vision-based metrics with high fidelity.

Data flow: store captured sessions in a central repository; review recent videos from an existing blog to calibrate the posture model; export to Blender-ready formats; use pre-made add-ons to speed calibration; and share outputs with clients through this pipeline. This enables interactive therapy sessions, facilitates industry-wide performance reviews, and offers robust workflows that run on existing hardware. The approach provides a practical path towards improving industry offerings via vision-based analytics and cross-team collaboration.

| Setup | Distance (m) | Height (m) | Tilt (deg) | Key (lx) | Fill (lx) | Back (lx) | Colour temperature (K) | Notes |
|---|---|---|---|---|---|---|---|---|
| Single baseline | 1.0–1.2 | 0.95–1.05 | 15–20 | 1000–1400 | 300–500 | 150–250 | 5400–5600 | Diffuse panel; tripod; posture emphasis; captured with high cohesion. |
| Tri-camera triangle | 1.2–1.4 | 0.95–1.05 | 15–25 | 900–1300 | 300–500 | 150–250 | 5400–5600 | Angles maximise coverage, reduce occlusion, and improve shared data. |
| Overhead validation | 2.0 | 1.60 | 0 | – | – | – | 5200 | Adds top-down confirmation of posture. |

From raw video to usable motion data: the data pipeline

Transferred raw video is mapped onto a standardised movement canvas within minutes, enabling quick iteration, seamless integration into product pipelines, and easier collaboration with developers.

Using AI-driven pose estimation, the system detects 2D keypoints on each frame and generates 3D data through a depth model and geometric constraints, delivering per-joint coordinates and confidence metrics.
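One common way to lift a 2D keypoint to 3D when a depth value is available is pinhole back-projection. The sketch below assumes known camera intrinsics (`fx`, `fy`, `cx`, `cy` in pixels) and a caller-supplied `depth_at(u, v)` function returning metres; both are placeholders for whatever your sensor SDK or depth model provides, and the `min_confidence` cut-off is illustrative.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth_m into camera space."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def lift_keypoints(keypoints_2d, depth_at, intrinsics, min_confidence=0.3):
    """Turn {joint: (u, v, confidence)} into per-joint 3D positions.

    Joints below min_confidence, or without a valid depth reading,
    are skipped so later stages can treat them as missing.
    """
    fx, fy, cx, cy = intrinsics
    joints_3d = {}
    for name, (u, v, confidence) in keypoints_2d.items():
        if confidence < min_confidence:
            continue
        depth_m = depth_at(u, v)
        if depth_m is None or depth_m <= 0.0:
            continue
        joints_3d[name] = {
            "position": backproject(u, v, depth_m, fx, fy, cx, cy),
            "confidence": confidence,
        }
    return joints_3d
```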

Calibration aligns coordinate spaces and frame rate, while cleaning removes jitter and occlusions with techniques such as smoothing and physics-informed constraints; the science behind these steps keeps the moves biomechanically plausible.
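Short occlusions can be bridged before smoothing is applied. The sketch below fills missing samples for a single joint by linear interpolation between the nearest valid neighbours; this is a deliberately simple stand-in for the physics-informed constraints mentioned above, and it assumes missing samples are marked as `None`.

```python
def fill_gaps(track):
    """Linearly interpolate None entries between valid (x, y, z) samples.

    Gaps at the start or end of the track have no neighbour on one side
    and are left as None.
    """
    filled = list(track)
    last_valid = None
    for i, sample in enumerate(track):
        if sample is None:
            continue
        if last_valid is not None and i - last_valid > 1:
            start = track[last_valid]
            span = i - last_valid
            for k in range(last_valid + 1, i):
                t = (k - last_valid) / span
                filled[k] = tuple(a + (b - a) * t for a, b in zip(start, sample))
        last_valid = i
    return filled
```

Long gaps are better flagged for review than interpolated, since a straight line through a fast move is rarely plausible.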

Retarget data to existing rigs and assets, adjust scale to match user avatars, and preserve integration within the product pipeline; designed to support therapy workflows with safety checks.
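Scale adjustment is often the first retargeting step. The sketch below applies a uniform scale based on the ratio of avatar height to performer height, assuming positions are expressed in metres relative to a floor-level origin under the performer; proportions that differ between performer and avatar would need per-bone handling, which is not shown.

```python
def scale_to_avatar(joints, performer_height_m, avatar_height_m):
    """Uniformly scale joint positions so the performer matches the avatar's height."""
    if performer_height_m <= 0.0 or avatar_height_m <= 0.0:
        raise ValueError("heights must be positive")
    factor = avatar_height_m / performer_height_m
    return {
        name: tuple(coord * factor for coord in pos) for name, pos in joints.items()
    }
```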

Quality checks track results via per-joint error, mean angular deviation, and the rate of high-confidence frames; across scenes, results guide model improvements, boosting engagement and revenue.

Operational guidance: keep the pipeline modular; enable quick updates by developers; reuse existing assets to accelerate generation of new content; implement privacy and safety controls.

Measuring and improving motion quality: practical metrics and checks

Recommendation: start with a baseline reliability check using live-action clips collected around diverse scenes, then compare AI-powered reconstructions against ground-truth poses; compute pose RMSE (cm) and angular deviation (degrees); set target ranges per joint, actor, and scene, and iterate after fixes.
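Both headline metrics are straightforward to compute once reconstructed and ground-truth poses share a coordinate frame. The sketch below assumes each pose is a dict of joint name to (x, y, z) in metres; the function names are illustrative.

```python
import math


def pose_rmse_cm(predicted, reference):
    """Root-mean-square joint position error in centimetres over shared joints."""
    shared = predicted.keys() & reference.keys()
    if not shared:
        raise ValueError("no joints in common")
    squared = sum(
        sum((p - r) ** 2 for p, r in zip(predicted[name], reference[name]))
        for name in shared
    )
    return math.sqrt(squared / len(shared)) * 100.0


def joint_angle_deg(a, b, c):
    """Angle at joint b, in degrees, formed by the segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("degenerate segment")
    cosine = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))
```

Angular deviation for a joint is then the absolute difference between `joint_angle_deg` on the reconstructed pose and on the ground-truth pose, averaged over frames.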

Key metrics span accuracy, reliability, and robustness. These checks are designed to be repeatable across setups, tools, and teams, helping anyone around a project to tighten quality without added hardware.

- Accuracy and pose fidelity
  - Pose accuracy: report root-mean-square error (RMSE) of joint positions in centimetres; target ranges vary by limb length, with wrists and ankles typically in the 2–5 cm band, knees and elbows 3–6 cm, hips 4–8 cm on well-calibrated data.
  - Joint-angle accuracy: document mean absolute error in degrees for the main joints (shoulder, elbow, hip, knee, ankle); aim for 3–6 degrees under moderate lighting and standard scenes.
  - Pose coverage: ensure a dense spread of captured poses across actions (standing, walking, squatting, bending) to prevent blind spots in the model.
  - Ground-truth alignment: use a short live-action sequence with reference landmarks to verify alignment between the reconstructed skeleton and visible silhouette; report reprojection error in pixels for key frames.
- Temporal stability and drift
  - Frame-to-frame consistency: measure average pose delta (distance between consecutive frames) and cap drift to under 1.5–3 cm per second depending on activity.
  - Drift over clips: track cumulative deviation across a 10–30 second run; target sub-5 cm total drift for typical actions, with tighter bounds for fast sequences.
  - Animation lag: quantify latency between live-action motion and reconstructed pose, prioritising sub-100 ms to keep timing credible in live previews.
- Robustness across setups
  - Lighting resilience: compare accuracy metrics under three lighting scenarios (bright, medium, low); ensure changes stay within ±20% of baseline errors.
  - Background complexity: test on scenes with clutter or moving background; report drop in keypoint visibility and corresponding accuracy changes.
  - Sensor fusion impact: when adding external cues (e.g., depth, inertial cues), quantify gains in stability and accuracy; document diminishing returns beyond a threshold.
- Data quality and health indicators
  - Missing-data rate: track frames with occluded or undetected keypoints; keep under 2–5% in controlled settings, higher thresholds acceptable in challenging scenes.
  - Noise floor: monitor jitter in low-contrast regions; apply smoothing only after confirming a real error floor rather than filtering away useful detail.
  - Sensors and tools health: log calibration status, frame rate, and processing load; alert when any metric drops below predefined reliability targets.
- Physiological alignment and realism checks
  - Health and mobility cues: verify that limb lengths and joint limits stay within plausible human ranges; flag anatomically implausible poses for manual inspection.
  - Force-consistency proxies: compare inferred joint forces or contact plausibility against known activity patterns; highlight scenes where force estimates appear inconsistent with motion.
- Validation workflow and feedback
  - Ground-truth pairing: build a lightweight validation set using live-action clips with clear ground-truth references; update thresholds after every 5–10 projects.
  - Team feedback loop: gather detailed notes from animators and technical directors (TDs) after reviews; aggregate issues by type (occlusion, fast motion, unusual poses) to guide targeted refinements.
  - Iteration cadence: run a short cycle weekly, focusing on the most frequent failure modes first; document improvements and remaining gaps in a living checklist.
- Practical checks by scene and actor
  - Scene variety: include actions around walking, jumping, bending, and climbing; track whether accuracy holds across transitions between actions.
  - Actor diversity: test with performers of different heights, body types, and mobility levels; adjust models to reduce biases in landmark placement and pose interpretation.
  - Fully automated dashboards: implement dashboards showing per-scene metrics, per-actor trends, and setup health; enable anyone on the team to spot regressions quickly.
- Process and implementation tips
  - After-session review: hold short debriefs to compare numerical results with visual feedback from vision-based previews and live-action references.
  - Documentation: keep a detailed log of setups, tool versions and calibration steps so teams around a project can reproduce results.
  - Flexibility: design checks to accommodate new scenes, gear, or datasets; preserve a scalable framework that grows with your AI-powered workflows.
  - Actionable thresholds: define concrete pass/fail criteria for each metric; avoid vague targets to make tuning focused and measurable.
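Several of the checks in this list reduce to small, repeatable calculations. The sketch below covers missing-data rate, frame-to-frame delta, and a pass/fail evaluation; the limits in `THRESHOLDS` are illustrative upper bounds taken from the ranges quoted in the list, and the metric names are assumptions to adapt per project.

```python
import math


def missing_data_rate(frames, joints):
    """Fraction of (frame, joint) slots with no detection (absent or None)."""
    total = len(frames) * len(joints)
    if total == 0:
        return 0.0
    missing = sum(1 for frame in frames for joint in joints if frame.get(joint) is None)
    return missing / total


def mean_frame_delta_cm(track):
    """Average distance in cm between consecutive valid samples of one joint."""
    deltas = [
        math.dist(a, b) * 100.0
        for a, b in zip(track, track[1:])
        if a is not None and b is not None
    ]
    return sum(deltas) / len(deltas) if deltas else 0.0


# Illustrative upper limits; tune per joint, actor, and scene.
THRESHOLDS = {"missing_rate": 0.05, "rmse_cm": 5.0, "latency_ms": 100.0}


def evaluate(metrics, thresholds=THRESHOLDS):
    """Return {metric: passed} for every metric that has a threshold."""
    return {
        name: metrics[name] <= limit
        for name, limit in thresholds.items()
        if name in metrics
    }
```

Feeding the `evaluate` output into a dashboard gives the concrete pass/fail view the list calls for, rather than a set of raw numbers to interpret.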

Supporting elements: ensure clear visibility into scenes, poses and timing; provide actionable feedback to editors and animators via concise notes and numeric traces; and maintain a healthy workflow around data quality, calibration and model updates. With this structured approach, everyone involved gains a reliable, transparent path to improved realism and believable movement without cumbersome instrumentation.
