Recommendation: Deploy a combination in which AI handles rapid data triage and pattern detection while professionals review the results. Teams follow guidelines to keep results accurate and efficient; this also adds a layer of accountability.

Real-world use involves balancing speed and context. AI excels at processing millions of data points, while decision-makers empathize with stakeholder concerns and ensure that decisions align with values. Through collaboration with oversight bodies and/or automated checks, the process yields a richer trail of justifications and invaluable governance records.

Concrete steps and metrics: aim to automate 60–70% of routine data processing; reserve 30–40% for decision-makers in high-stakes areas. Measure the conversion rate from raw inputs to decision-ready outputs, and track accuracy and continuous improvement after each iteration. This improves the decision workflow, while completed results become reusable elements that guide future work. Specialists can follow updates and stay attuned to domain needs, which adds valuable context to the system.

Ultimately, this approach can evolve alongside governance updates. It helps teams stay both compliant and flexible, adds resilience, and ensures accountability by documenting the rationale for each decision in a practical log that can be used for training and audits.
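
A decision log of this kind could be sketched as one JSON object per line. This is a minimal illustration under stated assumptions: the `DecisionRecord` fields and the helper names are hypothetical, not a schema prescribed by the text.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    # All field names are illustrative assumptions, not a required schema.
    decision_id: str
    model_output: str
    rationale: str
    reviewer: Optional[str]  # None if no professional reviewed the decision
    logged_at: str           # ISO 8601 timestamp in UTC


def make_record(decision_id, model_output, rationale, reviewer=None):
    """Build a record and stamp it with the current UTC time."""
    return DecisionRecord(
        decision_id=decision_id,
        model_output=model_output,
        rationale=rationale,
        reviewer=reviewer,
        logged_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record):
    """Serialize one record as a single JSON line."""
    return json.dumps(asdict(record), ensure_ascii=False)


def append_to_log(path, record):
    """Append the record to a JSON Lines file (one decision per line)."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(to_json_line(record) + "\n")
```

An append-only file keeps earlier entries untouched, which is one simple way to make the log usable for later audits; a production system would likely need access control and retention rules on top.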

Speed and scale of decision-making: where AI outperforms human judgment

Deploy an AI decision dashboard for rapid triage: route tasks through automated analysis using real-time data, then require a brief, reasoned review by clinicians before treatment decisions are made. This approach shortens cycle time, reduces fatigue, and supports safer patient outcomes in healthcare settings.

Scale relies on parallel pipelines: feed inputs to specialized models, aggregate their scores on a single dashboard, then escalate when confidence dips. Advances in language processing and structured data handling enable rapid analysis and pattern detection, with recommended actions across tasks and departments.

In complex cases, apply predefined thresholds: when confidence is low, ask a clinician to review and decide. The analysis should include a brief rationale and possible treatments so the reviewer can think clearly and determine the best course of action.

In healthcare, routine screening, monitoring, and documentation can be handled by the system while clinicians focus on personalized patient care and informed consent. This shortens time to treatment, improves consistency, and reduces fatigue among busy teams.

Safeguards should include continuous monitoring of performance metrics, audit logs, and a language layer that communicates clearly with patients and staff. If risk is high or the data look suspicious, the process should default to review by a clinical specialist and a documented rationale.

Measuring throughput: AI inference time versus human response time in real-world scenarios

Take a task-specific approach to testing: measure throughput as tasks completed per second, broken down by complexity, and design workflows in which fast inference covers quick decisions while operators handle complex problems using intuition. Set targets for each scenario and align logistics accordingly.

Build a realistic test set: 1,000 tasks drawn from service workflows, including advisory notes for farmers, product descriptions for a brand, and schedule updates in logistics. Record time to first action and total task completion time; compute throughput as tasks per hour and track the 95th percentile to expose inefficiencies. Include accuracy checks by comparing results against expected ground-truth values. For forecasting tasks, track forecasting performance and how it complements operators by helping teams decide on next actions.
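
The measurements described above can be sketched in a few lines. This is an illustration only: the `TaskResult` fields are hypothetical, throughput assumes tasks are processed one after another, and the percentile uses the nearest-rank method, which is one of several common definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class TaskResult:
    # Hypothetical record for one benchmark task.
    total_seconds: float  # total task completion time
    output: str           # what the system or operator produced
    expected: str         # ground-truth value for the task


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(results):
    """Throughput, tail latency, and accuracy for a batch of results."""
    durations = [r.total_seconds for r in results]
    correct = sum(r.output == r.expected for r in results)
    return {
        # Assumes sequential processing: tasks divided by summed duration.
        "tasks_per_hour": len(results) / sum(durations) * 3600,
        "p95_seconds": percentile(durations, 95),
        "accuracy": correct / len(results),
    }
```

Running `summarize` separately on the AI results and on the operator results for the same task set gives directly comparable numbers.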

Benchmark by class: fast responses at roughly 100 ms or less, routine updates in the 200–500 ms range, and deeper analysis in the 1–3 s range. For each class, track variance and identify where the machine approach delivers striking speed, while specialists in the loop remain critical for edge cases that require nuance, ethics, or expert intuition. Track decision descriptions to improve explainability and trust.

To reduce inefficiency and friction, cache common requests, batch in-flight items, and use asynchronous queues. Make routing decisions with confidence gates: if the system is confident, offer a fast answer; if uncertainty is high, hand the task to operators, who can reason with tacit knowledge and intuitive lines of thought. Maintain manual review for flagged cases and refine the preliminary rules so that collaboration stays tight and the strategy stays respected.
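
Caching and a confidence gate can be combined roughly as follows. The sketch makes several assumptions: `run_model` is a hypothetical stand-in for a real inference call, and the gate value of 0.8 is an arbitrary example rather than a recommended setting.

```python
from functools import lru_cache

CONFIDENCE_GATE = 0.8  # assumed example value; tune per scenario


def run_model(query):
    """Hypothetical stand-in for a real model call.

    Returns (answer, confidence). Short queries are treated as easy
    purely so the example has something to route.
    """
    confidence = 0.9 if len(query) < 40 else 0.5
    return f"answer to: {query}", confidence


@lru_cache(maxsize=1024)
def cached_inference(query):
    """Cache results so repeated common requests skip the model call."""
    return run_model(query)


def route(query):
    """Answer directly when confident, otherwise hand off to an operator."""
    answer, confidence = cached_inference(query)
    if confidence >= CONFIDENCE_GATE:
        return {"route": "auto", "answer": answer}
    return {"route": "operator", "answer": None}
```

Calling `route` twice with the same query hits the cache on the second call, which `cached_inference.cache_info()` will show.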

In practice, measurement should be collaborative: the model and the team work together to find bottlenecks, improve descriptions, and align them with real needs across services, from field advice for farmers to customer interactions with the brand. The result is a clear picture of capacity that shows where the quick wins are and where deeper analysis is worth the time and effort. Never rely on automation alone for important decisions; use the data to develop a strategy that supports jobs and strengthens brand trust while supporting farmers and other stakeholders.

Processing large volumes of data: using AI to surface actionable patterns

Recommendation: Deploy a scalable pattern-detection process that collects data from CRM, logs, telemetry, and external sources on a compute cluster, then delivers 5 to 8 actionable patterns per hour for rapid decision-making. This delivery model increases agility, lets teams focus on high-value actions, and helps them handle enormous volumes of data.

Pattern detection combines unsupervised clustering, time-series anomaly detection, and cross-channel correlation analysis to surface patterns relevant to sales goals, service outcomes, and risk signals. Each pattern should be mapped to a concrete action; teams should recognize patterns early and assign owners, with thresholds defined for rapid alerting.
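
As one illustration of time-series anomaly detection with an alert threshold, the sketch below uses a rolling z-score. This is a simple stand-in technique chosen for the example, not necessarily the method a production pipeline would use, and the window size and threshold are assumed values.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(series, window=12, threshold=3.0):
    """Flag points that deviate strongly from the preceding window.

    Returns a list of (index, z_score) pairs. `window` must be at
    least 2 because the sample standard deviation needs two points.
    """
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: the z-score is undefined, so skip the point.
            continue
        z = (series[i] - mean(history)) / sigma
        if abs(z) >= threshold:
            anomalies.append((i, z))
    return anomalies
```

Each flagged index can then be mapped to an owner and an action, in line with the alerting thresholds described above.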

Data processing and delivery: split data streams into 5–15 minute time windows for fast feedback; control data access with role-based access and data masking; use a feature store to keep signals consistent across models, so that both structured and unstructured data (texts, notes, correspondence) contribute to deeper, complementary insights.
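
Splitting a stream into fixed time windows can be sketched as below. The example assumes non-overlapping (tumbling) windows aligned to the Unix epoch and timezone-aware timestamps; the event shape `(timestamp, payload)` is hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone


def window_start(ts, minutes=5):
    """Floor a timezone-aware timestamp to the start of its window."""
    seconds = minutes * 60
    epoch = int(ts.timestamp())
    return datetime.fromtimestamp(epoch - epoch % seconds, tz=timezone.utc)


def bucket_events(events, minutes=5):
    """Group (timestamp, payload) pairs by the window they fall into."""
    windows = defaultdict(list)
    for ts, payload in events:
        windows[window_start(ts, minutes)].append(payload)
    return dict(windows)
```

Setting `minutes` anywhere from 5 to 15 covers the range mentioned above; shorter windows give faster feedback at the cost of noisier signals.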

Actionability and integration: provide dashboards, automated alerts, and exportable reports for sales and service teams; the plan should include integration with CRM, ticketing systems, and delivery platforms so that insights become part of daily work. This is not a replacement for skilled specialists; it extends decision-making capacity through faster pattern recognition.

Planning and governance: run a six-week ramp-up sprint followed by monthly reviews; define plan milestones and success metrics: speed of insight, accuracy of detected patterns, and improvement in key results; adjust data sources and features based on performance; maintain data quality and privacy.

Operating guidelines: keep the structure modular; use appropriately sized sampling to balance load and data volume; implement continuous monitoring for bias; set limits to avoid false positives; make sure teams engage with the outputs to check relevance and applicability, which helps them navigate complex data quickly.

Examples and outcomes: in a B2B context, analysts recognize patterns that reveal customer pain points; in services, patterns reveal recurring causes of failures; with these signals, teams can move on to targeted improvements and engagement strategies; outcomes include faster decision cycles, improved conversion, and more precise targeting.

Consistency over long runs: automating repetitive decision tasks without drift

Implement drift-aware automation with real-time monitoring and controls; combine automated decisions with periodic staff reviews to catch outliers, so that results stay aligned with business values, fatigue is reduced, and critical results remain reliable at scale.

Maintaining consistency over long cycles depends on descriptions that define task intent, rule sets whose outputs can be averaged across an ensemble, and Turing-inspired tests that compare automated labels with expert data. Draw on information from past results and identify the subtleties in task context, with the right constraints to avoid errors and keep the system stable. We suggest keeping a log of a million decisions to improve accuracy and give teams useful, widely applicable guidance. With disciplined constraints, performance improves quickly.

For a reliable rollout, set up a four-layer loop: describe tasks with precise descriptions; track drift indicators and fatigue signals; implement an ensemble that votes on results and triggers escalation for results outside the acceptable range; document results so you can empathize with stakeholders and learn from past performance. Insist on periodic recalibration using a small set of labeled results, and give staff targeted training to reduce the risk of unemployment while preserving indispensable oversight. This yields a tangible result for operations.
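
The voting-and-escalation layer could look roughly like this. It is a sketch under assumptions: the "acceptable range" is interpreted here as a minimum share of agreeing votes, and the 0.75 default is an example value, not one taken from the text.

```python
from collections import Counter


def ensemble_decide(votes, min_agreement=0.75):
    """Majority vote with escalation when agreement is too low.

    `votes` is a non-empty list of labels, one per ensemble member.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    if agreement >= min_agreement:
        return {"decision": label, "agreement": agreement, "escalate": False}
    # Not enough consensus: withhold the decision and escalate to staff.
    return {"decision": None, "agreement": agreement, "escalate": True}
```

For example, three "approve" votes and one "reject" meet the 0.75 bar and are accepted, while an even split is escalated for review.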

| Metric | What to measure | Guardrail / action | Frequency | Owner |
|---|---|---|---|---|
| Drift rate | % of outputs diverging from the gold standard | Flag; escalate for staff review | Real time | ML Ops |
| Traceability | Traceability of decisions | Descriptive logs; descriptions kept up to date | Daily | Compliance |
| Fatigue indicators | Run-time anomalies; deviation rate | Limit sequence length; rotate tasks | Hourly | Ops |
| Unemployment risk mitigation | Retraining progress; staff reallocation | Preserve indispensable roles; provide training | Quarterly | HR / Leadership |
| Performance impact | Speed and accuracy | Guardrails ensure the right choice | Weekly | Team leads |
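
The drift-rate row in the table can be computed directly from paired outputs and gold labels. The sketch below is illustrative: the 5% escalation limit is an assumed example, and exact string equality is used as the definition of "diverging".

```python
def drift_rate(outputs, gold):
    """Percentage of outputs that differ from the gold standard."""
    if not gold or len(outputs) != len(gold):
        raise ValueError("outputs and gold must be non-empty and equal length")
    diverging = sum(o != g for o, g in zip(outputs, gold))
    return 100.0 * diverging / len(gold)


def check_drift(outputs, gold, max_rate_pct=5.0):
    """Flag for staff review when the drift rate exceeds the limit."""
    rate = drift_rate(outputs, gold)
    return {"drift_rate_pct": rate, "escalate": rate > max_rate_pct}
```

Running this on a rolling sample of recent decisions gives a near-real-time signal without relabeling the full history.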

Estimating uncertainty: when AI confidence scores drive operational decisions

Rather than relying on raw scores alone, set calibrated confidence thresholds and route uncertain cases to a specialist for confirmation, ensuring that automated actions match the risk tolerance of healthcare and other critical domains.

Avoid over-automation in safety-critical tasks; use staged automation and clear handoffs.

Implement a three-tier workflow designed to keep automated results and expert oversight consistent, allowing prompt action in safe situations and deliberate review where there is uncertainty.

- High confidence (example threshold: ≥ 0.85): execute routine tasks automatically, with an auditable log and built-in checks to prevent cascading errors.

- Moderate confidence (0.65–0.85): require user confirmation before decisions are finalized; the user checks context, data quality, and potential consequences.

- Low confidence (< 0.65): escalate to the responsible person for re-evaluation, projecting the impact and the possibility of revision.
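
The three tiers above translate into a small routing function. The thresholds are the example values from the list; the tier names are hypothetical labels, and placing exactly 0.85 in the high tier is an assumption made to resolve the boundary.

```python
HIGH_CONFIDENCE = 0.85  # example threshold from the tiers above
LOW_CONFIDENCE = 0.65   # example threshold from the tiers above


def route_by_confidence(confidence):
    """Map a calibrated confidence score in [0, 1] to a handling tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= HIGH_CONFIDENCE:
        return "auto_execute"       # routine task, logged for audit
    if confidence >= LOW_CONFIDENCE:
        return "confirm_with_user"  # user confirms before finalizing
    return "escalate"               # responsible person re-evaluates
```

The function is only as good as the scores it receives, so it should sit behind a calibration step rather than raw model outputs.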

These guidelines help manage risk while using the scale of automated processing. Benefits include higher throughput, lower load during peak periods, and more stable task execution. The balance between automation and expertise is critical, especially when patterns shift across datasets or patient cohorts.

Implementation requires calibration and monitoring procedures:

- Use reliability diagrams and Brier scores to assess calibration; track score consistency over time and across data slices to detect drift.

- Analyze miscalibration patterns: overestimating rare events, underestimating routine cases, and shifts after data updates; adjust thresholds accordingly.

- Keep detailed logs that record predictions, confidence, actions taken, and the user or decision-maker involved, to support accountability and later analysis.

- In healthcare, alignment with clinical guidelines and experience is required; make sure automated processes follow patient-safety guidance and create a predictable user experience.
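
For the calibration check in the first item, the Brier score for binary outcomes is the mean squared difference between predicted probability and outcome:

$$\text{BS} = \frac{1}{N}\sum_{i=1}^{N}(p_i - y_i)^2$$

A minimal sketch of that score and of the binned data behind a reliability diagram follows. Equal-width bins and the default of five bins are assumptions made for the example.

```python
def brier_score(probs, outcomes):
    """Mean squared error between probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def reliability_bins(probs, outcomes, n_bins=5):
    """Rows of (mean confidence, observed frequency, count) per bin.

    Uses equal-width bins over [0, 1]; empty bins are omitted.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in last bin
        bins[index].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_conf = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            rows.append((mean_conf, observed, len(members)))
    return rows
```

In a well-calibrated system the mean confidence and observed frequency in each row are close; large gaps in particular bins point to where thresholds need adjusting.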

These steps let organizations forecast outcomes better, simplify the decision chain, and build a reliable structure that scales with data volume. After a risk analysis, teams can build a transparent system that makes it easier for people to trust and audit AI decisions while keeping responsibility for significant actions.

Track forecasting accuracy over time and across cohorts to detect drift and recalibrate quickly.

Bias, fairness, and interpretability: practical comparisons with human judgment

Recommendation: run a formal bias and interpretability audit before any deployment, using bias metrics on predictions at different scales; require manual review for high-stakes operations, and provide a clear explanation of decisions in user-facing tools, which can increase trust and accountability.

Measure the gap between model outputs and how decision-makers perceive risk across scenarios, and track final outcomes at the last stage. Publish a transparency note that links inputs to outputs and clearly identifies possible sources of bias. Use a single, widely accepted standard to compare performance across domains such as finance, transportation, and customer support operations; apply it to vehicles where appropriate.

To reduce discrepancies, introduce rationale-request workflows and pair interpretability with governance: ensure alignment with core values, allow for manual override, and give staff regular updates on fairness work. In image-related tasks, Midjourney-style prompts show how framing shapes perception, which underscores the need for transparency in decision paths.

Practical steps for scaling deployment: maintain a single source of truth for features and labels; publish model cards describing scope, data sources, and per-group performance metrics; require director or board approval for changes that affect risk; run regular disparity checks and recalibration; provide interpretable outputs so users can follow the rationale; maintain clear data-sharing policies for employee and customer data; make reporting accessible through news digests; design controls for automated systems used in vehicles and other operations; include a manual review path for borderline cases and a feedback loop with stakeholders. This does not replace oversight by decision-makers, but it strengthens accountability and consistency across teams.
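
Per-group metrics and a disparity check of the kind listed above can be sketched as follows. The record shape `(group, predicted, actual)` is hypothetical, and the gap computed here, the largest difference in selection rate between groups (often called the demographic parity difference), is only one of several fairness measures a real audit would consider.

```python
from collections import defaultdict


def per_group_rates(records):
    """Selection rate and accuracy per group.

    `records` is an iterable of (group, predicted, actual) with
    predicted and actual given as 0 or 1.
    """
    stats = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for group, predicted, actual in records:
        entry = stats[group]
        entry["n"] += 1
        entry["positive"] += int(predicted)
        entry["correct"] += int(predicted == actual)
    return {
        group: {
            "selection_rate": entry["positive"] / entry["n"],
            "accuracy": entry["correct"] / entry["n"],
        }
        for group, entry in stats.items()
    }


def selection_rate_gap(rates):
    """Largest difference in selection rate between any two groups."""
    values = [r["selection_rate"] for r in rates.values()]
    return max(values) - min(values)
```

The per-group dictionary maps naturally onto the model-card metrics, and the gap can be tracked over time as part of the regular disparity checks.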

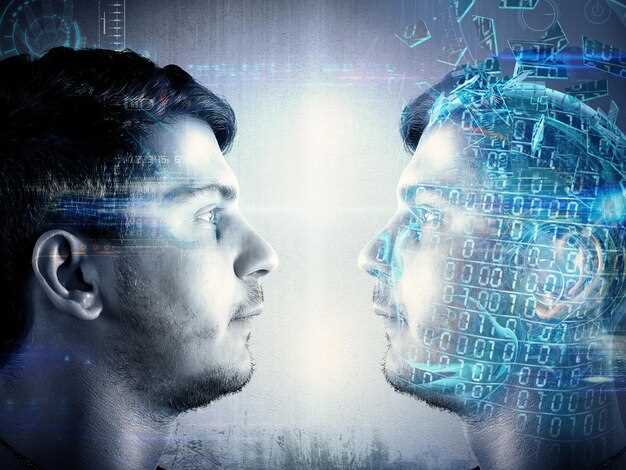

AI vs. human intelligence: how AI compares with human judgment
