Start with explicit opt-in consent for any material that enters public channels, and require documented creator approval in the production log. This protects people, keeps campaigns appealing, and surfaces opportunities while managing risk. The sequence begins with clear disclosures, verifiable rights, and safeguards that apply across all platforms.

Balance innovation with accountability by labeling synthetic contributions and keeping records. Use a transparent consent trail and a label-store workflow to track attribution; this approach preserves best practices for both generation and production. A practical test with camera feeds and a sharp side-by-side comparison shows whether outputs imitate real assets or drift from authenticity, helping to maintain trust.

Move from fear to better decision-making by outlining each risk factor and then proposing guardrail thresholds: upfront disclosure, limits on narration, and explicit consent for each platform. Engage a community of creators to provide feedback; humans remain central to quality control, ensuring that algorithmically produced assets augment, rather than replace, authentic voices. This guardrail remains essential as channels evolve.

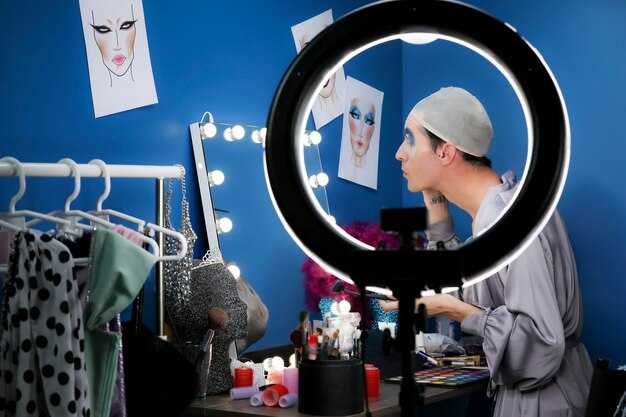

To scale responsibly, use sophisticated analysis pipelines and best practices that keep creative intent aligned with the brand voice. This approach has already been proven in several pilot programs, enabling generation at scale while preserving the human touch; the goal is to balance efficiency with authenticity. When production teams experiment, they should maintain a camera-to-creator feedback loop and avoid gimmicks that could imply endorsement. If a label-store feature becomes available in the future, use it to record provenance and allow post-launch adjustments, further increasing trust.

A Practical Ethical Framework for AI-Generated Content in Brand Campaigns

Require explicit consent for every AI-generated testimonial and label the outputs clearly to maintain trust. This initial step reduces the risk of misrepresentation as campaigns move across sectors. Cost-aware labeling helps stakeholders stay aligned.

Analyze the data provenance of each asset, detailing data sources, permissions, and any synthetic origin. Clarity here helps prevent bias, ensures responsible use, and supports post-launch audits. Data-driven metrics then become the basis for optimization.

Identify content as AI-generated in captions, thumbnails, and multilingual adaptations, especially when user-generated prompts are involved. This practice keeps communication transparent across all markets and reduces consumer confusion.
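A minimal pre-publication check for this rule might look like the Python sketch below. It assumes each asset records the text actually rendered on each surface; the label strings, the `Asset` fields, the surface names, and the English fallback are hypothetical choices for illustration, not an established standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical localized disclosure strings; final wording is a legal/localization decision.
DISCLOSURE_TEXT: Dict[str, str] = {
    "en": "AI-generated content",
    "pt": "Conteúdo gerado por IA",
    "es": "Contenido generado por IA",
}

# Surfaces named in the text; extend as needed.
REQUIRED_SURFACES = ("caption", "thumbnail")


@dataclass
class Asset:
    asset_id: str
    locale: str
    ai_generated: bool
    # Text actually rendered on each surface, e.g. {"caption": "..."}.
    surfaces: Dict[str, str] = field(default_factory=dict)


def expected_label(locale: str) -> str:
    # Fall back to English when no localized string exists (an assumption).
    return DISCLOSURE_TEXT.get(locale, DISCLOSURE_TEXT["en"])


def missing_disclosures(asset: Asset) -> List[str]:
    """List the required surfaces whose text lacks the localized AI label."""
    if not asset.ai_generated:
        return []
    label = expected_label(asset.locale)
    return [s for s in REQUIRED_SURFACES if label not in asset.surfaces.get(s, "")]


post = Asset("a-17", "pt", True, {"caption": "Novo sabor! Conteúdo gerado por IA", "thumbnail": ""})
print(missing_disclosures(post))  # ['thumbnail']
```

Checking the rendered text, rather than a boolean flag, catches the common failure where the label exists in the brief but is dropped from one localized surface.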

Use human oversight to review every asset before launch, focusing on accuracy, consent, and brand safety, including visuals, testimonials, and tone of language. Done correctly, this ensures alignment with brand values and prevents drift. It also keeps stakeholders informed.

Limit face synthesis to non-identifying use cases or custom avatars that are clearly fictional, avoiding likenesses of real individuals unless verified consent exists. This reduces the risk of misattribution and protects privacy.

Control costs through a phased rollout: start with a range of formats (images, short clips, and text-based assets) and compare performance against a traditional baseline. Aim for the right balance between efficiency and trust.

Personalize content by language, culture, and audience segment to increase resonance without compromising safety, especially in sensitive sectors. Use generative prompts that reflect local norms and avoid stereotypes. The result should feel authentic.

Adopt a blended approach that combines traditional and AI-generated elements where appropriate; this stays familiar to audiences while allowing experimentation with new formats. This balance helps campaigns remain credible and appealing.

Launching campaigns requires phased testing: run small pilots, analyze response times, and iterate before full-scale deployment. Use a data-driven feedback loop to refine prompts and assets.

Establish governance with measurable metrics: impressions, engagement, sentiment, and conversion, plus asset-level cost data and time to launch. Regular reviews keep ethics at the center as results scale.
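As a sketch of how the asset-level numbers might be derived, the Python below turns raw counts into engagement rate, conversion rate, cost per conversion, and days to launch. The `AssetStats` fields and the example figures are illustrative assumptions; sentiment is left out because it would need a separate scoring model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AssetStats:
    asset_id: str
    impressions: int
    interactions: int
    conversions: int
    cost: float      # production cost attributed to this asset
    briefed: date    # when work on the asset started
    launched: date   # when it went live


def governance_row(s: AssetStats) -> dict:
    """Derive per-asset governance metrics; ratios fall back to 0.0 or None on zero counts."""
    cost_per_conversion: Optional[float] = s.cost / s.conversions if s.conversions else None
    return {
        "asset_id": s.asset_id,
        "engagement_rate": s.interactions / s.impressions if s.impressions else 0.0,
        "conversion_rate": s.conversions / s.impressions if s.impressions else 0.0,
        "cost_per_conversion": cost_per_conversion,
        "days_to_launch": (s.launched - s.briefed).days,
    }


# Illustrative numbers only.
row = governance_row(AssetStats("clip-3", 20_000, 900, 40, 600.0, date(2024, 3, 1), date(2024, 3, 8)))
print(row["engagement_rate"], row["cost_per_conversion"], row["days_to_launch"])  # 0.045 15.0 7
```

Keeping one row per asset makes it straightforward to compare AI-assisted assets with the traditional baseline during a phased rollout.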

Use safeguards for face and voice synthesis: apply the same likeness restrictions, avoid deepfake risks, and rely on non-identifying imagery or licensed assets, using platforms such as HeyGen with caution. This reduces reputational risk while still allowing creative experimentation.

Documentation and accountability: maintain a sector-specific playbook, update it with new lessons, and require quarterly audits of generated content across all campaigns. Data provenance records, consent records, and version control support ongoing governance.

Clarify Rights and Consent for AI-Processed UGC

Require explicit, written consent from participants before any AI processing of user-generated content, and record approvals in a centralized workflow. This approach resonates with creators and audiences while meeting the necessary transparency standards.

Define ownership terms: grant licenses, not transfers; specify whether platforms or partners may use voiceovers, videos, or stories across multiple channels, and for what defined period; and guarantee revocation rights when creators withdraw consent. Licenses should clearly describe how the created work will be used on every platform.

Adopt a clear consent-logging approach that links each asset to a touchpoint, preserves provenance with a source indicator, and records preferred usage limits, so creators can see how their material flows through AI processing and cross-platform distribution.
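One way to make such a consent log checkable is a small record type plus a single permission test, as in this Python sketch. The schema (touchpoint, source, allowed channels and uses, expiry) mirrors the paragraph above, but the field names and example values are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet


@dataclass(frozen=True)
class ConsentRecord:
    """One consent entry per asset (hypothetical schema)."""
    asset_id: str
    creator_id: str
    touchpoint: str                # where consent was captured, e.g. "upload-form"
    source: str                    # provenance indicator for the original material
    allowed_channels: FrozenSet[str]
    allowed_uses: FrozenSet[str]   # e.g. {"ai_processing", "voiceover"}
    expires: date                  # end of the licensed period
    revoked: bool = False


def use_permitted(rec: ConsentRecord, channel: str, use: str, on: date) -> bool:
    """True only if the requested use stays inside every recorded limit."""
    return (
        not rec.revoked
        and on <= rec.expires
        and channel in rec.allowed_channels
        and use in rec.allowed_uses
    )


rec = ConsentRecord(
    asset_id="ugc-42",
    creator_id="creator-7",
    touchpoint="upload-form",
    source="original-upload",
    allowed_channels=frozenset({"instagram", "web"}),
    allowed_uses=frozenset({"ai_processing"}),
    expires=date(2025, 12, 31),
)
print(use_permitted(rec, "web", "ai_processing", date(2025, 6, 1)))     # True
print(use_permitted(rec, "tiktok", "ai_processing", date(2025, 6, 1)))  # False
```

Making the record immutable (`frozen=True`) means a change in terms has to be captured as a new record rather than a silent edit, which keeps the trail readable for creators.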

When Rohan shares genuine stories, consent must cover how he is represented, including voices and contexts. Disclosures should accompany AI-generated outputs to avoid misinterpretation and protect the audience, so the message resonates without overly sensational claims. Adjust voiceovers and aesthetics to reflect the original intent, creating engaging, impactful, and authentic experiences.

Implement a permission-based workflow that supports revocation, versioning, and audit logs. Include checks so that videos and other assets are not repurposed beyond the agreed scope, and notify participants when adjustments are needed so creators can review changes before publication. Policies should let creators withdraw consent quickly.
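The workflow described above could be sketched as an in-memory ledger: publishing is allowed only for the exact version the creator approved, revocation blocks everything, proposed edits notify the creator, and every step lands in an audit log. `ConsentLedger` and its methods are hypothetical names; a real system would persist the log and deliver notifications through whatever channel the team uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple


@dataclass
class ConsentLedger:
    """Hypothetical in-memory store; a real system would persist every entry."""
    versions: Dict[str, int] = field(default_factory=dict)   # asset_id -> approved version
    revoked: Set[str] = field(default_factory=set)
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)
    notifications: List[Tuple[str, str]] = field(default_factory=list)

    def _log(self, asset_id: str, event: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, asset_id, event))

    def approve(self, asset_id: str, version: int) -> None:
        self.versions[asset_id] = version
        self._log(asset_id, f"approved v{version}")

    def propose_change(self, asset_id: str, creator_id: str) -> None:
        # The creator is asked to review the edit before a new version can be approved.
        self.notifications.append((creator_id, f"review requested for {asset_id}"))
        self._log(asset_id, "change proposed; creator notified")

    def revoke(self, asset_id: str) -> None:
        self.revoked.add(asset_id)
        self._log(asset_id, "consent revoked")

    def can_publish(self, asset_id: str, version: int) -> bool:
        # Only the exact approved version, and only while consent stands.
        ok = asset_id not in self.revoked and self.versions.get(asset_id) == version
        self._log(asset_id, f"publish check v{version}: {'allowed' if ok else 'blocked'}")
        return ok


ledger = ConsentLedger()
ledger.approve("ugc-42", 1)
print(ledger.can_publish("ugc-42", 1))  # True
ledger.propose_change("ugc-42", "creator-7")
print(ledger.can_publish("ugc-42", 2))  # False: v2 has not been approved
ledger.revoke("ugc-42")
print(ledger.can_publish("ugc-42", 1))  # False: consent withdrawn
```

Logging the blocked checks as well as the allowed ones is a deliberate choice: an auditor can then see attempted reuse beyond the agreed scope, not just successful publications.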

Educate teams and creators about rights, consent, and obligations; anticipate possible misinterpretations; and offer practical guidance for fair decisions by mapping source provenance and keeping a transparent voice across all channels, so that engagement stays genuine while participants and audiences remain protected.

Disclose AI Involvement and Content Origin to the Audience

Always disclose AI involvement and content origin to the general public in messages and images. This practice reinforces credibility, supports understanding, and prevents misinterpretation of origin and authorship.

Concisely build into the script a statement declaring synthetic input and the behind-the-scenes content, with visible label-store references and other sources, so the audience gets context without guesswork.

Recent guidelines emphasize measuring the impact of disclosures: monitor engagement, understanding, and trust using analytics and quick surveys. This keeps the audience informed about origins and helps marketing decisions make sense.

Building governance in at the development stage helps preserve a genuine voice behind created content and images while scaling synthetic workflows. Andy provides checks to verify findings and adjust the script for clarity; teams should publish transparent updates.

Leveraging transparency builds trust and makes it possible to scale synthetic reviews, backed by label-store logs. By looking for unambiguous shifts in audience behavior, teams can verify results across dashboards. If disclosures fail, the content sends misleading signals. Without overpromising, provide actionable insight into impact that informs ongoing engagement.

Define Content Standards for Safety, Accuracy, and Respect

Within a few hours, publish a charter of principles that establishes safety, accuracy, and respect, and share it transparently with clients and users.

Think in terms of sector clusters and user journeys; identify concrete triggers; gather feedback from willing users. The final guidelines should cover facial data, scripted expressions, and emotionally charged stories. Make the guidelines easy to audit, and iterate on them with every feedback cycle.

Basic rules for content creators include avoiding manipulation, checking facts, and clearly labeling any synthetic or third-party material; keeping persona cues and facial expressions unambiguous; and capturing, timestamping, and storing every contribution in source logs for audit.

| Aspect | Guardrails | Metrics | Owner | Source |
|---|---|---|---|---|
| Safety | No hate, violence, or doxxing; no biometric data; recorded consent; legal notices for any use of facial data; avoid planned deception. | Flag rate; false positives; response time | Moderation team | Policy document |
| Accuracy | Require citations; verify claims; clearly label user-generated or externally sourced material | Unverified-claim rate; citation coverage; review minutes | Editorial; data team | Source audit |
| Respect | Inclusive language; no stereotypes; diverse voices; respect for emotional contexts | User sentiment; complaint count; escalation times | Content creators; community managers | Community Charter |
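Several metrics in the table reduce to simple ratios over review records. The Python sketch below assumes each reviewed item records whether it was flagged, whether a human confirmed a violation, and its claim counts; the `Review` fields are hypothetical, and "false positives" is computed as the share of flags a reviewer did not confirm.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Review:
    flagged: bool          # moderation flagged the item
    violation: bool        # a human confirmed a real policy violation
    claims: int            # factual claims in the item
    verified_claims: int   # claims checked against a source
    cited_claims: int      # claims carrying a citation


def ratio(num: float, den: float) -> float:
    return num / den if den else 0.0


def standards_metrics(reviews: Iterable[Review]) -> Dict[str, float]:
    items = list(reviews)
    flagged = [r for r in items if r.flagged]
    claims = sum(r.claims for r in items)
    return {
        "flag_rate": ratio(len(flagged), len(items)),
        # Share of flags that a reviewer did not confirm as a violation.
        "false_positive_share": ratio(sum(not r.violation for r in flagged), len(flagged)),
        "unverified_claim_rate": ratio(claims - sum(r.verified_claims for r in items), claims),
        "citation_coverage": ratio(sum(r.cited_claims for r in items), claims),
    }


sample = [
    Review(True, True, 4, 4, 3),
    Review(True, False, 2, 1, 1),
    Review(False, False, 0, 0, 0),
    Review(False, False, 2, 2, 2),
]
print(standards_metrics(sample))
# {'flag_rate': 0.5, 'false_positive_share': 0.5, 'unverified_claim_rate': 0.125, 'citation_coverage': 0.75}
```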

Establish Transparent Review, Approval, and Version-Control Workflows

Set up centralized, auditable review loops that capture input prompts, model choices, and final outputs. Roles include content creator, reviewer, and approver; involved stakeholders include legal, compliance, and education leads, plus a small core team. A single source of truth enables consistent audit trails across all assets.
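A single source of truth for these review loops can start as an append-only log, sketched here in Python. It assumes each event stores the role, prompt, a free-text model identifier, and a SHA-256 fingerprint of the exact output reviewed; `ReviewLog` and its fields are hypothetical, and JSON Lines export is just one convenient format for a shared audit store.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List

ROLES = {"creator", "reviewer", "approver"}


@dataclass(frozen=True)
class ReviewEvent:
    asset_id: str
    role: str
    actor: str
    prompt: str
    model: str          # model or tool identifier, recorded as free text
    output_sha256: str  # fingerprint of the exact output under review
    note: str = ""


class ReviewLog:
    """Append-only log: events can be added and read, never edited."""

    def __init__(self) -> None:
        self._events: List[ReviewEvent] = []

    def record(self, asset_id: str, role: str, actor: str, prompt: str,
               model: str, output: bytes, note: str = "") -> ReviewEvent:
        if role not in ROLES:
            raise ValueError(f"unknown role: {role}")
        event = ReviewEvent(asset_id, role, actor, prompt, model,
                            hashlib.sha256(output).hexdigest(), note)
        self._events.append(event)
        return event

    def history(self, asset_id: str) -> List[ReviewEvent]:
        return [e for e in self._events if e.asset_id == asset_id]

    def export_jsonl(self) -> str:
        # One JSON object per line, suitable for a shared audit store.
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self._events)


log = ReviewLog()
log.record("vid-9", "creator", "ana", "30s product demo", "model-x", b"draft-1")
log.record("vid-9", "reviewer", "joao", "30s product demo", "model-x", b"draft-1", note="tone ok")
print(len(log.history("vid-9")))  # 2
```

Hashing the output ties each approval to one specific file, so a later swap of the asset is detectable even if its name stays the same.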

- Versioning Policy

  - Adopt semantic versioning (v1.0, v1.1, …); every asset keeps a history through changelog entries and deterministic file names.

  - Metadata fields include: creator, prompts, AI-powered generators used (for example, HeyGen), model settings, time, credited actors, and status.

- Workflow Mechanics

  - Assign a clear sequence: content creator → reviewer → approver; set review-time targets to support scale.

  - Capture reviewer notes, rejection reasons, and suggested changes to help future work; tag each asset with a verdict (approved, needs rework, or archived).

  - An alternative path can allow faster review with quicker escalation rules.

  - Stricter checks can slow the cycle; adjust accordingly to balance speed and thoroughness.

- Disclosure, Authenticity, and Messaging

  - Attach visible disclosures stating that the content is AI-generated; ensure the message remains trustworthy and aligned with audience expectations.

  - When assets become part of campaigns, include a disclosure footer that explains the generation process without compromising clarity.

  - For assets already published, apply updated disclosures and corrections as part of ongoing governance.

- Quality Controls and Analysis

  - Implement a risk checklist to flag overly realistic depictions or misleading cues; use analysis routines to identify potential misrepresentation.

  - Maintain an educational layer for team members; regularly share best practices and common mistakes.

- Audit, Cost, and Edge-Case Governance

  - Monitor cost per asset and overall spend as content volume grows; balance speed with accuracy to avoid inflated costs.

  - Pay attention to edge cases: if actors or personas appear, require proper disclosures and consent records; keep records accessible for audits.

- Education, Culture, and Standards

  - Andy can propose quarterly governance reviews; run training on consent, authenticity, and messaging.

  - Include educational briefs that explain policies, scenarios, and decision criteria; encourage feedback from the staff involved.
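The versioning and workflow rules above can be combined into one small state machine, sketched in Python under stated assumptions: tags follow the two-part `vMAJOR.MINOR` form used in the text (full Semantic Versioning adds a patch number), file names are derived from asset ID and version, and a "needs rework" verdict bumps the minor version and restarts the creator → reviewer → approver sequence. `AssetVersion`, `bump`, and the rule that only the approver may approve are hypothetical design choices.

```python
from dataclasses import dataclass, field
from typing import List

# Sequence from the text: content creator -> reviewer -> approver.
STAGES = ["creator", "reviewer", "approver"]
VERDICTS = {"approved", "needs_rework", "archived"}


def bump(version: str, part: str = "minor") -> str:
    """Bump a 'vMAJOR.MINOR' tag as in the text (v1.0 -> v1.1)."""
    major, minor = (int(x) for x in version.lstrip("v").split("."))
    if part == "major":
        return f"v{major + 1}.0"
    return f"v{major}.{minor + 1}"


@dataclass
class AssetVersion:
    asset_id: str
    version: str = "v1.0"
    stage: str = "creator"
    verdict: str = ""
    changelog: List[str] = field(default_factory=list)

    def file_name(self, ext: str) -> str:
        # Deterministic: the same asset and version always yield the same name.
        return f"{self.asset_id}_{self.version}.{ext}"

    def advance(self) -> None:
        i = STAGES.index(self.stage)
        if i == len(STAGES) - 1:
            raise ValueError("already at final stage; record a verdict instead")
        self.stage = STAGES[i + 1]

    def decide(self, verdict: str, reason: str) -> None:
        if verdict not in VERDICTS:
            raise ValueError(f"unknown verdict: {verdict}")
        if self.stage == "creator":
            raise ValueError("submit for review before recording a verdict")
        if verdict == "approved" and self.stage != "approver":
            raise ValueError("only the approver can approve")
        self.verdict = verdict
        self.changelog.append(f"{self.version}: {verdict} ({reason})")
        if verdict == "needs_rework":
            # Rework produces a new minor version and restarts the sequence.
            self.version = bump(self.version)
            self.stage, self.verdict = "creator", ""


spot = AssetVersion("spot-12")
spot.advance()  # creator -> reviewer
spot.advance()  # reviewer -> approver
spot.decide("needs_rework", "voiceover implies endorsement")
print(spot.version, spot.file_name("mp4"))  # v1.1 spot-12_v1.1.mp4
```

Recording the rejection reason in the changelog before bumping the version keeps the reason attached to the version it applied to.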

Implement Bias Mitigation and Inclusive Representation

Audit data sources to ensure balanced representation across demographics, contexts, and styles; map signals from diverse communities, environments, and languages, avoiding gaps that skew the narrative toward a single perspective. Reach every audience segment and make sure the style stays true to lived experiences.

Establish a bias-mitigation protocol built around three pillars: inclusive prompts, diverse creator pools, and transparent evaluation. Adopt UGC-style guardrails to keep outputs aligned with real-world contexts, creativity, and audience expectations; experts confirm that this approach reduces drift. Designing prompts for inclusion helps prevent biased outputs, and red-team reviews should flag persistent gaps. Supporters highlight a sophisticated risk model.

Build a metrics suite with parity, concern, and outcome indicators; track results by task and region; use data from cameras, videos, and content variations to surface blind spots.
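A parity indicator can be as simple as comparing each group's observed share of assets with a target share, as in this Python sketch. The target shares, the 5-point tolerance, and the region labels are hypothetical inputs; this share-versus-target check is one simple choice among many possible fairness measures.

```python
from collections import Counter
from typing import Dict, Iterable


def representation_gaps(
    labels: Iterable[str],
    target_share: Dict[str, float],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Return groups whose observed share falls short of target by more than tolerance.

    labels: one group label per asset in the sample (e.g. per region or audience).
    target_share: hypothetical desired share per group, e.g. from audience data.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    gaps: Dict[str, float] = {}
    for group, target in target_share.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if target - observed > tolerance:
            gaps[group] = round(target - observed, 3)
    return gaps


regions = ["north"] * 6 + ["south"] * 3 + ["islands"]
print(representation_gaps(regions, {"north": 0.4, "south": 0.4, "islands": 0.2}))
# {'south': 0.1, 'islands': 0.1}
```

Running the same check per task as well as per region follows the text's suggestion to track results along both dimensions.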

Implement a controlled-experimentation framework to minimize imitation and stereotypes; although imperfect, iterative prompting and post-hoc adjustments help reduce bias.

Scalability plan: assemble a portfolio of variations across styles, settings, and audiences; store it in a modular library of created assets; and ensure results are reproducible and transparently documented. Keep producing new assets through modular workflows.

Monitor Compliance and Fix Issues with Real-Time Audits

Enable real-time audits to flag policy violations within seconds and auto-remediate when needed; this streamlines approvals, protects clients, and reduces risk across campaigns. In addition, a centralized monitoring layer should keep a live view of assets and UGC-style submissions, ensuring consistent checks across production and external channels.

Ingest feeds from production systems, moderation queues, creator submissions, and complaint tickets so audits can analyze content in the context where violations put users at risk. Use tags and metadata to classify items by category, risk, and touchpoint, then trigger remediation rules automatically while staying aligned with the same policy baseline across teams.
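Tag-driven remediation can be expressed as a shared list of rules, each pairing a predicate over an item's tags with an action name, as in this Python sketch. The tag keys, rule names, and action names are hypothetical; in practice the actions would call into the team's publishing and ticketing tools.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Item:
    item_id: str
    source: str  # e.g. "moderation_queue", "creator_submission", "complaint_ticket"
    tags: Dict[str, str] = field(default_factory=dict)  # category, risk, touchpoint, ...


# A rule pairs a name and a predicate over an item with a remediation action name.
Rule = Tuple[str, Callable[[Item], bool], str]

# Hypothetical shared baseline: every team evaluates the same list.
BASELINE_RULES: List[Rule] = [
    ("undisclosed-ai",
     lambda i: i.tags.get("category") == "ai_generated" and i.tags.get("disclosed") != "yes",
     "add_disclosure"),
    ("high-risk",
     lambda i: i.tags.get("risk") == "high",
     "unpublish_and_escalate"),
    ("likeness",
     lambda i: i.tags.get("category") == "face_synthesis" and i.tags.get("consent") != "verified",
     "hold_for_consent"),
]


def triage(items: List[Item], rules: List[Rule] = BASELINE_RULES) -> List[Tuple[str, str, str]]:
    """Return (item_id, rule_name, action) for every rule an item triggers."""
    actions = []
    for item in items:
        for name, predicate, action in rules:
            if predicate(item):
                actions.append((item.item_id, name, action))
    return actions


feed = [
    Item("p1", "creator_submission", {"category": "ai_generated", "disclosed": "no", "risk": "low"}),
    Item("p2", "complaint_ticket", {"category": "face_synthesis", "risk": "high"}),
]
for entry in triage(feed):
    print(entry)
```

Evaluating every rule rather than stopping at the first match means one item can trigger several remediations, which is what the second item shows.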

To scale, implement checks that apply across campaigns, clients, and channels; this enforces the same standards when handling UGC-style material at volume. Use UGC-style templates or assets to test the rules and verify that risk signals align with strategy. It is also essential to track where failures occur, so remediation can target the touchpoints that need it most.

Real-time dashboards should show metrics such as compliance rate, time to remediation, and residual risk; analysts can review trends, maintain an audit trail, and provide a direct line to internal teams. Also include automated escalation to production owners when a violation is confirmed, keeping accountability cross-functional.
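The dashboard figures named above might be computed from audit-check records along these lines. This Python sketch assumes each check stores pass or fail, detection and resolution timestamps, and whether a human confirmed the violation; "open violations" is used as a stand-in for residual risk, and the escalation list holds confirmed, unresolved items for production owners. All names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Check:
    asset_id: str
    passed: bool
    detected: Optional[datetime] = None  # when a violation was flagged
    resolved: Optional[datetime] = None  # when remediation finished
    confirmed: bool = False              # a human confirmed the violation


def dashboard(checks: List[Check]) -> dict:
    failures = [c for c in checks if not c.passed]
    fixed = [c for c in failures if c.detected and c.resolved]
    minutes_to_fix = [(c.resolved - c.detected) / timedelta(minutes=1) for c in fixed]
    return {
        "compliance_rate": (len(checks) - len(failures)) / len(checks) if checks else 1.0,
        "mean_minutes_to_fix": mean(minutes_to_fix) if minutes_to_fix else None,
        # Residual-risk proxy: violations that are still open.
        "open_violations": sum(1 for c in failures if c.resolved is None),
        # Confirmed, unresolved violations are escalated to production owners.
        "escalate": [c.asset_id for c in failures if c.confirmed and c.resolved is None],
    }


t0 = datetime(2024, 5, 1, 9, 0)
checks = [
    Check("a", True),
    Check("b", True),
    Check("c", False, t0, t0 + timedelta(minutes=30)),
    Check("d", False, t0, None, confirmed=True),
]
print(dashboard(checks))
```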

With these practices, efficiency rises, scalability improves, and assets stay consistent across clients and campaigns; risks become manageable rather than disruptive, letting teams sustain a steady cadence of compliant user content at scale.

The Rise of AI-Generated UGC: How Brands Can Leverage It Ethically
