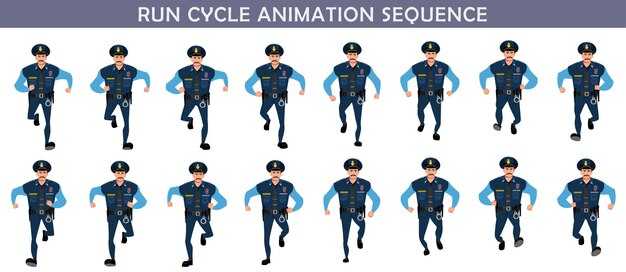

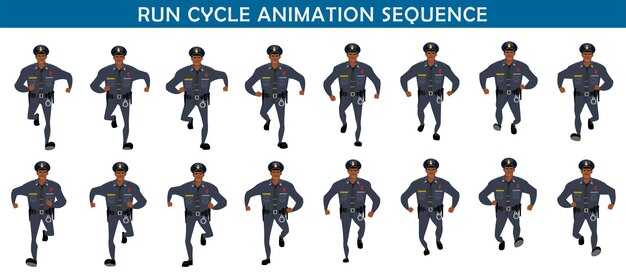

Recommendation: switch on auto retargeting to map movement data from a single camera rig onto your avatar within minutes, skipping manual rigging and enabling instant previews. This approach is designed to be accessible for solo artists and explainer teams in studios, keeping the workflow creative, precise, and reliable.

Within recent pipelines, neural tracking layers offer a high level of accuracy, stabilizing body and limb patterns across varied lighting and occlusion. The information from camera feeds is fused into a compact representation, which removes jitter and aligns data across frames. This keeps the results consistent on the page and within your asset library.

Best practices call for a lightweight 2–3 view camera setup, automatic calibration at startup, and a steady rate around 60–120 frames per second. A neural fusion stage then aligns limbs and torso to produce stable poses, so artists can iterate quickly and tweak only when needed. This reduces the demand on teams and helps ship scenes faster.

Designed to be accessible across studios, this workflow integrates into a documentation page and into the artists’ toolchain, featuring auto-configs that reduce setup time. For premium results, save presets for different actor types and movement patterns so teams can reuse them on every project.

Step 3 Add cinematic polish

Use a markerless multi-camera setup; apply a neural cleanup pass; publish the refined footage.

Sync iPhone feeds via a shared clock and limit drift to under 5 ms. Keep zoom between 1x and 2x for main frames, and crossfade between angles to preserve smoothness. Target a 24-30 fps timeline to anchor movement, then apply a denoise and IK smoothing pass to remove jitter while preserving details. Plan shots as a sequence: interior lighting, actors, and props in a single take when possible, otherwise split across two to three passes for dynamic drama. In post, focus on breath moments, pauses, and line readings to elevate production value; subtle tempo shifts leave room to surprise audiences. Aim for animated clarity in every beat.
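
The 5 ms drift budget can be checked after a take by comparing when each feed registered a shared sync event, such as a clap. The following is a minimal sketch, assuming per-camera timestamps in seconds on the shared clock; the camera names and the helpers `drift_report` and `cameras_over_budget` are hypothetical.

```python
DRIFT_BUDGET_MS = 5.0  # drift limit quoted in the text

def drift_report(sync_times_s, reference):
    """Return each camera's offset from the reference camera in milliseconds."""
    ref = sync_times_s[reference]
    return {cam: (t - ref) * 1000.0 for cam, t in sync_times_s.items()}

def cameras_over_budget(sync_times_s, reference, budget_ms=DRIFT_BUDGET_MS):
    """List cameras whose absolute offset exceeds the drift budget."""
    report = drift_report(sync_times_s, reference)
    return sorted(cam for cam, off in report.items() if abs(off) > budget_ms)

# Hypothetical clap timestamps for three phone feeds.
claps = {"phone_a": 12.000, "phone_b": 12.003, "phone_c": 12.009}
print(cameras_over_budget(claps, reference="phone_a"))
```

Any camera that shows up in the list would need re-syncing before its footage is fused with the others.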

Ensure privacy by reviewing GDPR practices for data handling; anonymize faces when publishing to social platforms unless consent is explicit. Use test clips (here named charlie) in a blank scene to audition timing and pacing before final rendering. The goal is quality that is more attainable than a creator might expect, so production teams can publish quickly.

Leverage markerless data across a compact rig: a phone-based stereo pair plus a distant unit. Compute parallax, depth, and focus stacking to simulate cinema lensing. A slow zoom lasting 0.5-1.0 s can create a surprisingly cinematic sense of scale; afterwards, lock to the action to prevent drift. Emphasize a moment by cutting to a close-up that reveals texture during a dramatic beat.

| Aspect | Recommendation | Rationale |
|---|---|---|
| Footage source | markerless multi-camera; iPhone clips | Depth cues, parallax; smoother movement |
| Timing | 24-30 fps; baseline zoom 1x-2x | Smooth rhythm; consistent cadence |
| Post-processing | neural cleanup; denoise; IK smoothing | Quality lift; preserves detail |
| Export & privacy | MP4/H.264; captions; metadata; GDPR compliant | Social readiness; privacy compliance |

Calibrate capture rig for cinematic look and consistent data

Start with a baked calibration pass using a checkerboard and grayscale targets to lock perspective, distortion, and color space across cameras. Record a 60-second sweep at 24 fps, shutter 1/48, ISO 400, color temperature 5600K, in RAW or linear. This baseline keeps the timeline clean and ensures tracking data remains dependable, which minimizes risk of drift in later takes.
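
The 1/48 s shutter at 24 fps follows the common 180-degree shutter rule, where exposure time is half the frame interval. A small sketch of that arithmetic is shown here; the function name `shutter_time` is illustrative and not part of any tool mentioned above.

```python
from fractions import Fraction

def shutter_time(fps, shutter_angle_deg=180):
    """Exposure time in seconds for a given frame rate and shutter angle."""
    return Fraction(shutter_angle_deg, 360) / Fraction(fps)

print(shutter_time(24))  # 1/48
print(shutter_time(30))  # 1/60
```

If the timeline later moves to 30 fps, the same rule gives 1/60 s, which keeps motion blur comparable between the calibration sweep and the shoot.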

- Geometry and alignment: lock inter-camera offsets within 5 mm, verify pitch/yaw/roll within ±0.2°, and confirm the origin aligns with the production coordinate system. A precise baseline improves performance during generative passes and when switching to keyframing for gaps.

- Optics and exposure: fix focal length, aperture, and sensor alignment for every camera. Use a distortion model and apply a single LUT; keep exposure within 1/3 stop across the set to avoid chroma shifts that complicate tracking.

- Color management: pin white balance to a single target, profile color space (Rec. 709 or Rec. 2020 as appropriate), and bake a 3D LUT into the timeline. Include a neutral gray card in the frame as a stable reference when glossy surfaces produce reflections.

- Lighting and surfaces: maintain consistent color temperature and light intensity; avoid flicker by syncing to the same power source. Ensure material finishes are matte where possible to keep highlight behavior predictable on screen.

- Tracking readiness: verify marker visibility above 90% across the shot; preempt occlusion with an auxiliary marker layout if the subject moves near the set edges. Log any missing frames to guide post-processing.
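
The tolerances in this checklist can be turned into a quick automated gate before each shoot. The sketch below assumes the measurements are already available as plain numbers; the function `rig_issues` and its parameter names are hypothetical, and only the thresholds come from the list above.

```python
def rig_issues(offset_error_mm, rotation_error_deg, exposure_spread_stops,
               visible_frames, total_frames):
    """Return a list of tolerance violations (empty when the rig is ready)."""
    issues = []
    if abs(offset_error_mm) > 5.0:
        issues.append("inter-camera offset off by more than 5 mm")
    if abs(rotation_error_deg) > 0.2:
        issues.append("rotation outside +/-0.2 degrees")
    if exposure_spread_stops > 1 / 3:
        issues.append("exposure spread exceeds 1/3 stop")
    # Visibility must be strictly above 90% of frames.
    visibility = visible_frames / total_frames if total_frames else 0.0
    if visibility <= 0.90:
        issues.append("visibility at or below 90%")
    return issues

print(rig_issues(2.0, 0.1, 0.2, visible_frames=95, total_frames=100))
```

An empty list means every check passed; otherwise each message names the tolerance to revisit.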

Workflow notes: the rig should support a clean data stream that a creator can trust, whether you’re capturing a quiet scene or a massive crowd. Start by documenting the baseline, then evolve into a robust pipeline that leverages generative cues and early keyframing to fill gaps without breaking continuity. There’s no need to chase perfection with every take; keep a compact set of presets to limit setup time and protect the director’s timeline from disruption.

- Establish a repeatable start point for each shoot day; re-run the bake if any parameter drifts more than a threshold (e.g., 0.5% scale change or 2 px marker error).

- Run a quick test: shoot a 10-second motion sweep, then review tracking stability and color consistency on the big screen to confirm the look is ready for the shoot.

- Record a brief texture pass under varied lighting to ensure the data remains clean when the scene shifts to different environments.
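
The re-bake rule from the first item can be written as a small predicate. This is a sketch under the example thresholds quoted above (0.5% scale change or 2 px marker error); the function name `needs_rebake` and its arguments are assumptions for illustration.

```python
def needs_rebake(baseline_scale, current_scale, marker_error_px,
                 max_scale_change=0.005, max_marker_error_px=2.0):
    """True when calibration has drifted past either example threshold."""
    scale_change = abs(current_scale - baseline_scale) / baseline_scale
    return scale_change > max_scale_change or marker_error_px > max_marker_error_px

print(needs_rebake(1.0, 1.002, marker_error_px=1.0))  # within both limits
print(needs_rebake(1.0, 1.010, marker_error_px=1.0))  # scale drifted by 1%
```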

After calibration, the director can trust the data pipeline to deliver a consistent visual language across shots, keeping everything aligned on the timeline and reducing post mismatches. If something feels off, check for missing calibration frames, re-bake, and re-validate the geometry. The process is simple, but the payoff is large: a screen that stays engaging and credible from start to end.

Refine movement curves using reference footage and timing

Assemble a personal library of 3–5 reference clips that reflect target styles (gaming, indie, cinematic). We've found these anchors improve every sequence, helping creators produce exciting, crisp curves that feel natural. Store the full collection for reuse across projects to make iteration faster.

- Select 2–3 anchor moments from each clip: peaks, troughs, holds. Mark exact frames to build a timing map that aligns to your project’s beat grid.

- Create two baseline curves: one for rhythm (overall pace) and one for expressive push. Use a simple spline or Bezier interpolation to connect keyframes, and annotate each segment with ease values (ease-in, ease-out).

- Generate a per-shot timing sheet by mapping key poses to beats per minute or rhythm cues. Keep notes on tempo shifts so editors can verify alignment quickly.

- Refine transitions by adjusting segment lengths so each move breathes. Apply subtle ease at every transition to avoid abrupt changes that break readability. Use a moment of anticipation to cue intent.

- Iterate using playback at 0.75x and 1.25x to reveal stiffness and rhythm drift. Update curves until cadence remains consistent across scales and devices (mobile previews are especially telling).

- Validate on multiple targets: test on mobile and desktop, compare against references, and ensure fidelity in full-res exports. This helps maintain quality across YouTube uploads and premiere cuts.

- Organize curves by favorite styles for different genres (sports, adventure, puzzle). This expands your toolkit; teammates can reuse presets to accelerate production.

- Gather peer feedback and note suggestions from a small group; implement changes in a compact revision cycle. Document changes so teammates understand the reasoning behind each tweak.
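
The timing map and eased curves described in these steps can be sketched in a few lines. This example uses simple polynomial easing functions in place of a full Bezier solver, and assumes beat 0 lands on frame 0; the names `EASES`, `beat_to_frame`, and `sample` are illustrative.

```python
EASES = {
    "linear": lambda t: t,
    "ease-in": lambda t: t * t,
    "ease-out": lambda t: 1 - (1 - t) ** 2,
    "ease-in-out": lambda t: t * t * (3 - 2 * t),  # smoothstep
}

def beat_to_frame(beat, bpm, fps):
    """Frame index at which a given beat lands (beat 0 is frame 0)."""
    return round(beat * 60.0 / bpm * fps)

def sample(keys, frame):
    """Evaluate a curve at a frame.

    keys: list of (frame, value, ease) sorted by frame; the ease of a key
    applies to the segment that starts at that key.
    """
    if frame <= keys[0][0]:
        return keys[0][1]
    for (f0, v0, ease), (f1, v1, _) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + (v1 - v0) * EASES[ease](t)
    return keys[-1][1]  # hold the last value past the final key

# A push on beat 1 and a release on beat 2 at 120 BPM, 24 fps.
keys = [
    (beat_to_frame(0, 120, 24), 0.0, "ease-in"),
    (beat_to_frame(1, 120, 24), 10.0, "ease-out"),
    (beat_to_frame(2, 120, 24), 0.0, "linear"),
]
print([sample(keys, f) for f in (0, 6, 12, 18, 24)])
```

Keeping the ease label on each key doubles as the per-segment annotation the steps ask for, so the timing sheet and the curve stay in one place.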

By using reference footage as a guide and tight timing controls, you create movement that feels intentional and believable. This approach aims to be easy to share, easy to adjust, and easy to scale across projects, from indie experiments to bigger gaming trailers, while staying aligned with quality expectations on platforms like YouTube and Premiere.

Stabilize data: reduce noise, jitter, and marker drift

Begin by applying a two-stage denoising: run a low-pass filter on every marker stream, then execute a Kalman smoother across the timeline to suppress high-frequency components while keeping essential movement intact.
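
A minimal sketch of the two-stage idea follows, applied to one coordinate of one marker. It assumes an exponential moving average as the low-pass stage and a random-walk Kalman filter with a Rauch-Tung-Striebel backward pass as the smoother; the noise parameters `q` and `r` and all function names are illustrative choices, not values from a specific pipeline.

```python
def low_pass(samples, alpha=0.5):
    """Exponential moving average; a smaller alpha filters more strongly."""
    out = [samples[0]]
    for z in samples[1:]:
        out.append(alpha * z + (1 - alpha) * out[-1])
    return out

def kalman_smooth(samples, q=0.01, r=1.0):
    """Random-walk Kalman filter followed by an RTS backward smoothing pass.

    q: process noise variance (how fast the true position may change)
    r: measurement noise variance
    """
    x_f, p_f = [samples[0]], [r]          # filtered means and variances
    for z in samples[1:]:
        p_pred = p_f[-1] + q              # predict: state carries over
        k = p_pred / (p_pred + r)         # Kalman gain
        x_f.append(x_f[-1] + k * (z - x_f[-1]))
        p_f.append((1 - k) * p_pred)
    x_s = x_f[:]                          # backward pass over the timeline
    for i in range(len(samples) - 2, -1, -1):
        c = p_f[i] / (p_f[i] + q)
        x_s[i] = x_f[i] + c * (x_s[i + 1] - x_f[i])
    return x_s

def stabilize(samples):
    """Stage 1: low-pass filter. Stage 2: Kalman smoother."""
    return kalman_smooth(low_pass(samples))

# A marker resting at 5.0 with alternating +/-1 jitter.
raw = [5 + (1 if i % 2 == 0 else -1) for i in range(20)]
print([round(v, 2) for v in stabilize(raw)])
```

Raising `q` relative to `r` lets the smoother follow fast movement more closely, at the cost of passing more jitter through.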

Adopt a dynamic model that treats each marker as a rigid-body point, enforcing inter-marker constraints, which preserves lifelike trajectories and reduces jitter across sequences.

Anchor drift control: re-anchor the pelvis or root as a fixed reference, then impose soft constraints between linked markers to prevent gradual deviation across frames. Here's a checkpoint to verify drift handling in practice.
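
One way to picture re-anchoring and soft constraints is the sketch below. It assumes each frame is a dictionary of marker name to an (x, y, z) tuple and that the rest length between two linked markers is known; `reanchor_to_root`, `soften_link`, and the `stiffness` parameter are hypothetical names for illustration.

```python
import math

def reanchor_to_root(frames, root="pelvis"):
    """Express every marker relative to the root marker in each frame."""
    anchored = []
    for frame in frames:
        rx, ry, rz = frame[root]
        anchored.append({name: (x - rx, y - ry, z - rz)
                         for name, (x, y, z) in frame.items()})
    return anchored

def soften_link(a, b, rest_length, stiffness=0.5):
    """Move point b part of the way back toward rest_length from point a.

    stiffness=0 leaves b untouched; stiffness=1 enforces the length exactly.
    """
    d = math.dist(a, b)
    if d == 0:
        return b
    target = rest_length + (d - rest_length) * (1 - stiffness)
    scale = target / d
    return tuple(ai + (bi - ai) * scale for ai, bi in zip(a, b))

frames = [{"pelvis": (1.0, 1.0, 1.0), "knee": (1.0, 0.0, 1.0)}]
print(reanchor_to_root(frames))
print(soften_link((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), rest_length=1.0))
```

A practical checkpoint is to confirm that the root stays at the origin in every re-anchored frame and that link lengths stay close to their rest values over a long take.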

Automate cleaning: implement a script that runs in live or batch mode, leveraging third-party libraries for filtering, smoothing, and gap filling; baked results enter a clean dataset that can be published for visual review or films.

Validation steps: compute RMSE against a ground-truth subset, inspect residuals, and verify that lifelike timing holds on the visual timeline. Flag large errors for human review and adjust parameters accordingly.
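
The RMSE check and the flagging step can be sketched as follows, assuming the cleaned track and the ground-truth subset are equal-length lists of values in the same units; `flag_outliers` and its `threshold` argument are illustrative.

```python
import math

def rmse(estimate, truth):
    """Root-mean-square error between two equal-length sequences."""
    if len(estimate) != len(truth) or not truth:
        raise ValueError("sequences must be non-empty and the same length")
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))

def flag_outliers(estimate, truth, threshold):
    """Frame indices whose absolute residual exceeds the threshold."""
    return [i for i, (e, t) in enumerate(zip(estimate, truth))
            if abs(e - t) > threshold]

cleaned = [0.0, 1.1, 2.0, 6.0]
reference = [0.0, 1.0, 2.0, 3.0]
print(rmse(cleaned, reference), flag_outliers(cleaned, reference, threshold=1.0))
```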

Occlusion handling: fill gaps using trajectory prediction from adjacent frames, and avoid creating artifacts that alter subtle personality. Keep the risk of over-smoothing under control so that drift is resolved without dulling nuance.
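
As a simple stand-in for trajectory prediction, the sketch below fills short gaps by linear interpolation between the valid samples on either side. It assumes missing frames are stored as `None`; the `max_gap` limit is an assumed safeguard that leaves long occlusions untouched for manual review.

```python
def fill_gaps(track, max_gap=10):
    """Linearly interpolate runs of None that are bounded by valid samples."""
    out = list(track)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is None:
            start = i
            while i < n and out[i] is None:
                i += 1
            gap = i - start
            # Only fill gaps with a valid neighbour on both sides.
            if start > 0 and i < n and gap <= max_gap:
                a, b = out[start - 1], out[i]
                for j in range(gap):
                    out[start + j] = a + (b - a) * (j + 1) / (gap + 1)
        else:
            i += 1
    return out

print(fill_gaps([0.0, None, None, 3.0]))
```

Gaps at the start or end of a track, and gaps longer than `max_gap`, stay as `None` so they can be logged rather than silently invented.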

Workflow tips: document data provenance, lock reference frames, and maintain a changelog. Credit those who prepared reference films, scripts, and voice notes. Keep generative cues clearly separated from captured data before publishing. Compare competitors’ pipelines and align outputs when delivering for advertisements. Chocolate layers make a handy analogy for layered resilience, which helps videoscribes communicate the process to everyone involved, including stakeholders.

Live pipelines in production require a concise vault of tested presets: store large parameter sets as baked profiles, define target precision, and keep a visual audit trail to resolve post-release issues in films or advertisements.

Enhance shots with controlled camera shake and depth cues

Start by establishing a stable baseline and add micro-shakes on key beats. Use a small, random shake pattern: 0.3–0.8 degrees of tilt and 0.2–0.8 seconds per burst, repeating every 1–2 seconds. Keep total drift under 2 degrees across a shot to avoid fatigue. In a traditional setup, begin on a heavy handheld rig during tests, then translate to a lighter, studio-friendly configuration on set to save time.
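
A seeded generator makes such a shake pattern repeatable between takes. The sketch below uses the ranges quoted above; it assumes the 2-degree limit is applied conservatively to the sum of burst amplitudes, and the function `shake_bursts` is a hypothetical helper.

```python
import random

def shake_bursts(shot_length_s, seed=0, max_total_deg=2.0):
    """Return (start_s, duration_s, amplitude_deg) bursts for one shot."""
    rng = random.Random(seed)           # seeded so a pattern can be reused
    bursts, t, total = [], 0.0, 0.0
    while True:
        t += rng.uniform(1.0, 2.0)      # bursts repeat every 1-2 seconds
        duration = rng.uniform(0.2, 0.8)
        if t + duration > shot_length_s:
            break                       # burst would run past the shot
        amplitude = rng.uniform(0.3, 0.8)
        if total + amplitude > max_total_deg:
            break                       # stay inside the drift budget
        bursts.append((t, duration, amplitude))
        total += amplitude
    return bursts

for start, duration, amplitude in shake_bursts(10.0, seed=1):
    print(f"{start:5.2f}s  {duration:.2f}s  {amplitude:.2f} deg")
```

Because the budget is counted as a simple sum, longer shots run out of shake allowance after a few bursts; in practice the bursts would be placed on key beats rather than spread evenly.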

Depth cues: layer foreground, mid, and background to create parallax; let the foreground move 1.2–2.0 times more than the background; vary focal length between 28mm and 50mm to emphasize depth. Use a shallow depth of field (f/2.8–f/4) to boost plane separation.
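
The parallax ratio can be expressed as per-layer offsets for a given camera move. This is a small sketch that assumes the mid layer sits halfway between background and foreground motion; the function `layer_offsets` and that midpoint choice are illustrative.

```python
def layer_offsets(background_shift_px, foreground_ratio=1.6):
    """Pixel shift per layer for one camera move.

    foreground_ratio must stay in the 1.2-2.0 range given in the text.
    """
    if not 1.2 <= foreground_ratio <= 2.0:
        raise ValueError("foreground_ratio should be between 1.2 and 2.0")
    mid_ratio = (1.0 + foreground_ratio) / 2  # assumed: halfway between layers
    return {
        "background": background_shift_px,
        "mid": background_shift_px * mid_ratio,
        "foreground": background_shift_px * foreground_ratio,
    }

print(layer_offsets(10.0, foreground_ratio=2.0))
```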

Placement and timing: anchor camera shake to on-screen events rather than a constant drift. Keep a clear timing plan so the seconds of action drive the bursts; small changes in pace yield stronger readability.

Process and planning: load a library of 3–5 shake patterns, plan their timing around the action, and evaluate them over a few rounds using quick on-set checks. If a pattern feels stiff, swap to a softer curve instead.

Quality control: ask questions during reviews and use director notes to refine. Ensure the result yields legible depth cues on different platforms; a YouTube short or trailer sequence shows how a controlled aesthetic translates across the industry.

Live-speed testing and learning: use detection features to flag frames where shake masks actor performance; adjust accordingly; the final product should feel animated yet natural.

Flavor and tone: treat energy like tempered chocolate. Avoid heavy jolts and keep a steady ease, so the result reads as purposeful and cinematic.

Closing: starting from a solid plan, you can produce results that feel global in reach. Steve combines traditional rigs, digital noise patterns, and smooth easing curves to yield a unique look.

Sync character acting with dialogue and sound design for coherence

Plan a synchronized pipeline: map dialogue timing to avatar movement using keyframing, align breath and micro-expressions from voice tracks, and load a customizable soundscape to enhance coherence.
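
Mapping dialogue timing to keyframes can start from line timestamps taken from the voice track. The sketch below assumes cues are given as (start, end, text) in seconds and places a breath key a fixed lead time before each line; the 0.25 s lead and the function `dialogue_keyframes` are illustrative assumptions.

```python
def dialogue_keyframes(cues, fps=24, breath_lead_s=0.25):
    """Convert (start_s, end_s, text) cues into frame-based keys."""
    keys = []
    for start_s, end_s, text in cues:
        start_frame = round(start_s * fps)
        keys.append({
            "text": text,
            "breath_frame": max(0, start_frame - round(breath_lead_s * fps)),
            "start_frame": start_frame,
            "end_frame": round(end_s * fps),
        })
    return keys

cues = [(0.5, 2.0, "Hello there."), (2.5, 4.0, "Ready when you are.")]
for key in dialogue_keyframes(cues):
    print(key)
```

These frame numbers give the animator a first pass to refine by hand; the breath key is only a placeholder for the anticipation before each line.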

Attach every gesture to the spoken line: mouth, jaw, and lip shapes, plus head tilt and eye gaze, should reflect tone and pacing in an animated avatar. Animate the lips in rough passes first, keep the underlying data accurate, and let the world context guide ambience.

Directors and the animator plan scenes in advance, establishing a drama arc, emotional beats, and natural pauses. A generator can auto-create initial lip cues; afterwards, refine via keyframing to produce believable moments, which improves pace and consistency.

Export formats should be consistent across platforms; that's a high bar for the team to meet. Create a page-based review for quick checks, and run scale tests on devices to catch timing drift early.

Sound strategy: align voice performance with ambient design, and think about breathing, overlaps, and nonverbal cues. As tension rises, the soundscape should scale accordingly, and your approach should keep tempo aligned.

Workflow tips: load stable data, trust your team, and keep notes for future revisions. Add a tiny firefly cue in your editor to mark timing windows; this helps animators know when to push subtle head moves.

Keep their data secure: GDPR-compliant practices, audit trails, and consent records; this plan demonstrates care, like a trusted policy, and helps the animator produce reliable outputs.

Steering tip: Steve can oversee asset imports, ensure format standards, and drive iterations over scenes to produce faster results.

Effortless Character Animation with High-Precision Motion Capture
