Recommendation: A compact dashboard automates the tuning of smooth clip transitions and reduces workload spikes during production. This setup helps you animate sequences and act on findings from AI-driven experiments.

A historical study suggests that a dashboard-first workflow can reduce manual load by up to 25–30% in production pipelines, which frees time for animation polish. A single interface simplifies managing assets, including game cutscenes and UI sequences, while keeping optimization metrics in view.

Implementation tips: start with a free trial of a compact tool set, test it on a handful of tasks, track how automation shifts hours, and record a historical performance baseline so you can measure gains. Use a compact dashboard to keep everything in one place, to manage milestones, workload spikes, and asset states, and to maintain an animation-friendly environment that scales from rough drafts to finished assets.

Beyond these concrete steps, focus on management practices that improve collaboration between production teams and creative units. These processes scale easily, turning a handful of AI-assisted edits into a steady editing rhythm that feels natural to users, while staying free and accessible to small studios and larger setups alike.

Practical guide for selecting and implementing AI-driven transitions in Alexa-enabled video workflows

Start with a compact library of AI-driven transitions that run automatically, support customization, can be triggered by Alexa voice input, and work with your existing asset formats. Build a baseline set that adapts dynamically to on-screen movement and spoken language cues, giving viewers a smoother experience and making the workflow easier to run.

Choose options that can run in-house or with cloud augmentation, reliably recognize inputs, capture viewer signals for different scenes, and keep pace with current trends. Favor techniques that produce natural, human-like transitions while keeping latency low and avoiding heavy players or external assets.

Design a modular pipeline that loads assets, captures inputs, and manages publishing sequences across formats. Use AI to generate animations that respond to those inputs, producing movement that feels natural and human-like.

Integrate Alexa via the Alexa Skills Kit (ASK): define intents that trigger automatic transitions, recognize spoken language cues, and allow customization by voice. Keep sensitive logic in an in-house component while routing scalable workloads through cloud-augmented paths, so the workflow stays responsive on devices with limited resources.
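A minimal sketch of the intent-to-transition step, written in plain Python rather than the ASK SDK so it can run locally (in line with keeping sensitive logic in-house). It reads the standard Alexa request envelope (`request.type`, `request.intent.name`, `request.intent.slots`); the intent name `ApplyTransitionIntent`, the slot name `transitionType`, and the preset values are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransitionPreset:
    name: str
    duration_ms: int
    easing: str


# Hypothetical preset library keyed by the phrase a user would say.
PRESETS = {
    "soft cut": TransitionPreset("soft cut", 150, "ease-out"),
    "fade in": TransitionPreset("fade in", 300, "cubic-bezier(0.22, 0.8, 0.24, 1.0)"),
    "hold": TransitionPreset("hold", 0, "linear"),
}


def handle_request(envelope: dict) -> dict:
    """Turn an Alexa IntentRequest envelope into a transition command for the editor."""
    request = envelope.get("request", {})
    if request.get("type") != "IntentRequest":
        return {"action": "ignore"}
    intent = request.get("intent", {})
    # "ApplyTransitionIntent" and the "transitionType" slot are hypothetical names.
    if intent.get("name") != "ApplyTransitionIntent":
        return {"action": "ignore"}
    slot = intent.get("slots", {}).get("transitionType", {})
    spoken = (slot.get("value") or "").strip().lower()
    preset = PRESETS.get(spoken)
    if preset is None:
        # Unknown or missing value: ask the user again instead of guessing.
        return {"action": "reprompt", "speech": "Which transition should I apply?"}
    return {
        "action": "apply",
        "preset": preset.name,
        "duration_ms": preset.duration_ms,
        "easing": preset.easing,
    }
```

In a real skill this dispatcher would sit behind the skill endpoint, and the returned command would be forwarded to whatever editor integration you use; that bridge is outside the scope of this sketch.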

Test systematically: publish post variants to measure viewer engagement, retention after transitions, and cross-format compatibility. Track metrics such as drop-off points and average completion time, and use what you learn to refine inputs, recognition rules, and animation techniques.
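To make "retention after transitions" concrete, this sketch assumes you can export, per viewer, the second at which they stopped watching each variant. It computes the share of viewers present at each transition who are still watching a few seconds later; the 5-second window and the sample data are illustrative assumptions.

```python
from statistics import mean


def retention_after(watch_ends, t, window=5.0):
    """Share of viewers still watching at time t who are also watching at t + window.

    watch_ends holds, per viewer, the second at which they stopped watching.
    """
    present = [end for end in watch_ends if end >= t]
    if not present:
        return 0.0
    return sum(1 for end in present if end >= t + window) / len(present)


def compare_variants(variants, transitions, window=5.0):
    """Mean post-transition retention for each published variant."""
    return {
        name: mean(retention_after(ends, t, window) for t in transitions)
        for name, ends in variants.items()
    }


# Hypothetical data: stop times (seconds) for four viewers of each variant.
variants = {"A": [10, 20, 30, 40], "B": [5, 12, 40, 40]}
print(compare_variants(variants, transitions=[8.0]))
```

With this sample data, variant A keeps 75% of viewers through the 5 seconds after the cut at 8 s, while variant B keeps about 67%.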

Implementation checklist: confirm compatibility with existing formats, set up voice-triggered cues, assemble a small library of AI-driven transitions, enable easy customization, and define a lightweight governance process for collecting feedback and iterating without disrupting existing assets.

Identify and compare top AI tools for transitions and their standout features

Begin with AI-powered platforms that offer fast rendering, ready-made templates, and robust techniques for seamless scene changes; these are the best fit when you need to scale production.

Standout features to look for include automated pattern recognition, pattern-level cues, element-aware scene changes, and enhancement options that adapt to each team's workflow through automation.

Global reach matters: choose solutions backed by community-driven feedback, which is a strong source of best practices.

When evaluating, examine how each platform turns ideas into actions: how patterns emerge, how an agent automates editing, and how the system maintains the order of effects. Translating briefs into automated sequences remains a core capability.

Consider the impact on followers and engagement: AI-powered engines can shorten production cycles, which makes room for longer videos or more frequent posting and helps teams ship content faster.

Look at data sources and conversion capabilities: how well the platform turns rough cuts into polished sequences, and how it handles translating captions or subtitles. The output is often ready to publish with little further work.

Assessment criteria include speed, stability, integration with existing workflows, pricing model, and the strength of community-driven templates. Also weigh alignment with your content strategy and the ability to scale to global projects.
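One way to apply these criteria consistently is a weighted scoring matrix. This is a minimal sketch; the weights, the 1–5 rating scale, and the criterion keys are assumptions to adapt to your own priorities.

```python
import math

# Hypothetical weights; they sum to 1.0 so the result stays on the 1-5 scale.
WEIGHTS = {
    "speed": 0.20,
    "stability": 0.20,
    "integration": 0.20,
    "pricing": 0.15,
    "community_templates": 0.10,
    "strategy_alignment": 0.10,
    "scalability": 0.05,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine 1-5 ratings per criterion into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_tools(tools, weights=WEIGHTS):
    """Return tool names ordered from best to worst weighted score."""
    return sorted(tools, key=lambda name: weighted_score(tools[name], weights), reverse=True)


# Guard against weights that no longer sum to 1 after editing.
assert math.isclose(sum(WEIGHTS.values()), 1.0)
```

Rejecting incomplete ratings, rather than treating missing criteria as zero, keeps a half-evaluated tool from being ranked unfairly.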

Actionable guidance: map your needs to a product roadmap, test with a small set of clips, and measure the improvement against a baseline.

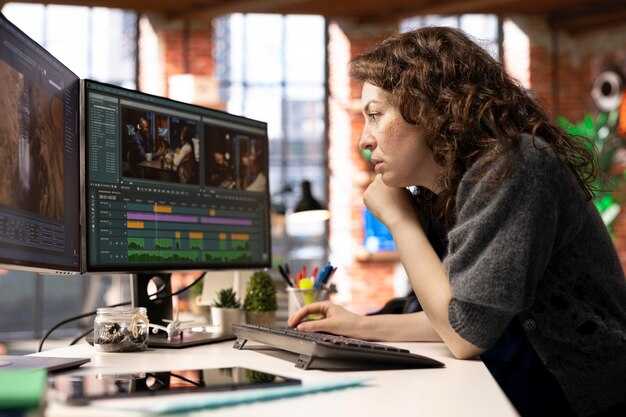

Integrate AI transitions into editing suites (Premiere Pro, After Effects, DaVinci Resolve) with Alexa voice prompts

Use Alexa voice prompts to trigger AI-powered, scale-ready scene changes directly inside Premiere Pro, After Effects, and DaVinci Resolve, delivering faster edits, better accessibility, and consistent dialogue pacing.

The core concept combines soliconcepts with customization panels, enabling prompt management, capture of dialogue cues, and posting-ready outputs. A centralized dashboard tracks tasks, avatar-driven prompts, and AI-generated suggestions from ChatGPT, helping teams worldwide agree on look and timing.

Implementation steps: write guidelines covering recognition, dialogue, and training data; ensure standards compliance; enable batch processing to produce multiple variants; and maintain a clear, accessible naming convention for scenes and avatars. The workflow should record prompts, prompt history, and outputs in an easily auditable trail that supports posting and review.
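The naming convention and auditable trail can be sketched as follows, assuming a hypothetical `<project>_sc<scene>_<avatar>_v<variant>` pattern. Zero-padded numbers keep names sortable, and each batch output gets one trail entry linking it to the prompt that produced it.

```python
from itertools import product


def variant_names(project, scenes, avatars, variants_per_combo=2):
    """Deterministic, sortable output names for every scene/avatar/variant combination."""
    return [
        f"{project}_sc{scene:03d}_{avatar.lower()}_v{v:02d}"
        for scene, avatar in product(scenes, avatars)
        for v in range(1, variants_per_combo + 1)
    ]


def record(trail, prompt, output):
    """Append one auditable entry (sequence number, prompt, output) to the trail."""
    entry = {"seq": len(trail) + 1, "prompt": prompt, "output": output}
    trail.append(entry)
    return entry


# Hypothetical batch: two scenes, one avatar, two variants each.
trail = []
for name in variant_names("promo", scenes=[1, 2], avatars=["Ava"]):
    record(trail, prompt="soft cut between scenes", output=name)
```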

Practical tips: connect Alexa to a lightweight scripting interface, expose dialogue cues as metadata, and log outcomes in a dashboard. Use chatbots to test prompts and iterate quickly, then roll out scale-ready pipelines across studios worldwide. This approach helps teams manage assets, maintain brand consistency, and accelerate content posting while respecting accessibility guidelines.

| Suite | AI-driven setup | Dialogue prompts | Output benefits |
|---|---|---|---|
| Premiere Pro | Integrated menu prompts, AI-assisted scene shifts, metadata tagging, scale-ready | Alexa cues: “soft cut”, “hold”, “fade in”; ChatGPT suggests variations | Speed, accessibility, repeatability |
| After Effects | Animation-driven scene shifts, keyframe templates | Dialogue cues, avatar cues, soliconcepts templates | Rich visuals, brand-consistent pacing |
| DaVinci Resolve | Fusion-like prompts, color-safe scene shifts | Recognition cues, track IDs | Color-accurate, scalable |

Design effective transition presets: timing, easing, and motion matching for different video styles

Baseline presets use a 300 ms duration, cubic-bezier(0.22, 0.8, 0.24, 1.0) easing, and motion matching that preserves end velocity across clips. This saves time while keeping the edit flowing and can help increase engagement.
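To see what the baseline easing does frame by frame, this sketch evaluates a CSS-style `cubic-bezier(x1, y1, x2, y2)` curve (endpoints fixed at (0, 0) and (1, 1)) by bisecting for the curve parameter at each time fraction. The 30 fps frame rate is an assumption; the duration and control points come from the baseline preset.

```python
def _bezier(s, p1, p2):
    """One coordinate of a cubic Bezier whose endpoints are fixed at 0 and 1."""
    u = 1.0 - s
    return 3 * u * u * s * p1 + 3 * u * s * s * p2 + s ** 3


def cubic_bezier(x1, y1, x2, y2):
    """Return ease(x) for a CSS-style cubic-bezier(x1, y1, x2, y2) timing curve."""
    if not (0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0):
        raise ValueError("x control points must lie in [0, 1]")

    def ease(x):
        if x <= 0.0:
            return 0.0
        if x >= 1.0:
            return 1.0
        # With x1, x2 in [0, 1] the x-curve is monotonic, so bisection finds s.
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if _bezier(mid, x1, x2) < x:
                lo = mid
            else:
                hi = mid
        return _bezier((lo + hi) / 2, y1, y2)

    return ease


def sample_preset(duration_ms=300, fps=30, curve=(0.22, 0.8, 0.24, 1.0)):
    """Per-frame progress values (0 to 1) for the baseline transition preset."""
    ease = cubic_bezier(*curve)
    frames = max(1, round(duration_ms * fps / 1000))
    return [ease(i / frames) for i in range(frames + 1)]
```

Because both y control points are high (0.8 and 1.0), most of the movement happens early: more than 90% of the transition is complete halfway through its duration, which reads as a strong ease-out.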

Style mapping covers cinematic narratives, educational explainers, lifestyle showcases, sports montages, and live-action events. Suggested durations: quick cuts at 150–240 ms with a crisp ease; cinematic arcs at 350–500 ms with a gentle ease-out; wide movements (pan, zoom) at 400–700 ms to avoid jarring shifts. Each preset should expose adjustable controls, be scale-ready, and preserve motion cues across edits to keep audiences engaged.
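The duration ranges can be encoded as data so every preset starts from the same defaults. A minimal sketch; the style keys are hypothetical, and using the midpoint as the default is an assumption rather than a rule from the mapping.

```python
# Duration ranges (ms) from the style mapping; keys are hypothetical labels.
STYLE_RANGES = {
    "quick_cut": (150, 240),
    "cinematic_arc": (350, 500),
    "wide_move": (400, 700),  # pans and zooms
}


def duration_for(style, requested_ms=None):
    """Default to the middle of the style's range, or clamp a requested duration into it."""
    lo, hi = STYLE_RANGES[style]
    if requested_ms is None:
        return (lo + hi) // 2
    return max(lo, min(hi, requested_ms))
```

Clamping instead of rejecting lets editors nudge durations freely while the preset keeps them inside the range for that style.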

Motion matching techniques: align end positions, scale changes, and directional vectors across clips. When objects move, preserve their direction of travel and keep velocity consistent with real-world timing. This matches how viewers perceive motion, keeps edits coherent, and produces consistent results.
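A sketch of the velocity part of motion matching, assuming you already have per-frame (x, y) positions of the tracked object from some tracker (not shown). It measures the velocity jump at a cut and generates offsets that carry the outgoing velocity into the incoming clip; scale and rotation matching are left out for brevity.

```python
import math


def end_velocity(track, k=3):
    """Average per-frame displacement over the last k steps of an (x, y) track."""
    if len(track) < 2:
        raise ValueError("need at least two tracked positions")
    k = min(k, len(track) - 1)
    (x0, y0), (x1, y1) = track[-1 - k], track[-1]
    return ((x1 - x0) / k, (y1 - y0) / k)


def start_velocity(track, k=3):
    """Average per-frame displacement over the first k steps of an (x, y) track."""
    if len(track) < 2:
        raise ValueError("need at least two tracked positions")
    k = min(k, len(track) - 1)
    (x0, y0), (x1, y1) = track[0], track[k]
    return ((x1 - x0) / k, (y1 - y0) / k)


def velocity_mismatch(outgoing, incoming, k=3):
    """Magnitude (pixels per frame) of the velocity jump at the cut."""
    vx0, vy0 = end_velocity(outgoing, k)
    vx1, vy1 = start_velocity(incoming, k)
    return math.hypot(vx1 - vx0, vy1 - vy0)


def matched_offsets(outgoing, n, k=3):
    """Offsets that carry the outgoing end velocity into the first n incoming frames."""
    vx, vy = end_velocity(outgoing, k)
    return [(vx * i, vy * i) for i in range(1, n + 1)]
```

Averaging over the last few frames, rather than using a single frame difference, makes the estimate less sensitive to tracker jitter.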

Audio synchronization: align crossfades with the beat grid, apply a light crossfade to reduce pops, and keep audio envelopes consistent so the rhythm holds without distractions. These cues keep sound and picture in step for the audience.
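Snapping a planned cut to the beat grid is simple arithmetic when the tempo is constant. This sketch assumes a fixed BPM and a known first-beat offset, and uses a 40 ms crossfade as a hypothetical example of a "light" fade; tracks with tempo changes would need a real beat map instead.

```python
import math


def snap_to_beat(t, bpm, first_beat_s=0.0):
    """Move a planned cut time (seconds) to the nearest beat on a constant-tempo grid."""
    period = 60.0 / bpm
    n = math.floor((t - first_beat_s) / period + 0.5)  # round half up
    return first_beat_s + max(n, 0) * period


def crossfade_window(cut_s, bpm, fade_ms=40, first_beat_s=0.0):
    """Center a short crossfade on the beat nearest the planned cut."""
    beat = snap_to_beat(cut_s, bpm, first_beat_s)
    half = fade_ms / 2000.0
    return (beat - half, beat + half)
```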

Platform-wide management: save presets as a shared file library across platforms, with metadata such as style, timing, easing, and movement to speed up development. A linguana management approach standardizes naming, tagging, and usage, which improves efficiency, saves time, and reduces pricing surprises for clients. This approach works well for marketing clips, training footage, and other real-world productions where consistent results matter.
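A minimal sketch of such a preset library, assuming JSON files and a hypothetical `<style>-<movement>-<duration>ms` naming convention. Names are derived from the metadata so they cannot drift from the fields, and duplicate names are rejected instead of silently overwritten.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Preset:
    style: str
    duration_ms: int
    easing: str
    movement: str
    tags: list = field(default_factory=list)

    @property
    def name(self) -> str:
        # Hypothetical naming convention: <style>-<movement>-<duration>ms, lowercase.
        return f"{self.style}-{self.movement}-{self.duration_ms}ms".lower()


def dump_library(presets) -> str:
    """Serialize presets to JSON keyed by their standardized names."""
    library = {}
    for preset in presets:
        if preset.name in library:
            raise ValueError(f"duplicate preset name: {preset.name}")
        library[preset.name] = asdict(preset)
    return json.dumps(library, indent=2, sort_keys=True)


def load_library(text: str) -> dict:
    """Rebuild Preset objects from the JSON produced by dump_library."""
    return {name: Preset(**fields) for name, fields in json.loads(text).items()}
```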

Automate production: templates, scripts, and batch rendering to scale projects using Alexa

Create a centralized template pack and a script-driven workflow, then run batch renders triggered through Alexa to scale production across projects. Reuse existing assets (clips, edits, and color profiles) and store them as files in a consistent folder structure to support automated assembly. This setup reduces repetitive work, improves consistency, and speeds up editor feedback. Start with 8–12 templates covering openings, lower-thirds, captions, overlays, and color treatments, and attach metadata to assets so they can be assembled automatically.
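The assembly step can be sketched as a planner that turns templates plus tagged assets into render jobs. This assumes a hypothetical `projects/<project>/...` folder layout and takes the first matching asset for each required kind; the Alexa trigger and the actual renderer are outside the sketch. Templates whose required assets are missing are reported rather than rendered.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class Template:
    name: str
    required_kinds: tuple  # asset kinds the template needs, e.g. ("clip", "color_profile")


def plan_batch(project, templates, assets):
    """Build render jobs; assets maps kind -> list of paths relative to the project folder."""
    root = PurePosixPath("projects") / project  # hypothetical folder layout
    jobs, skipped = [], []
    for t in templates:
        missing = [k for k in t.required_kinds if not assets.get(k)]
        if missing:
            skipped.append((t.name, missing))
            continue
        inputs = {k: str(root / assets[k][0]) for k in t.required_kinds}
        jobs.append({
            "template": t.name,
            "inputs": inputs,
            "output": str(root / "renders" / f"{t.name}.mp4"),
        })
    return jobs, skipped
```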

Automation specifics: templates define structure; scripts apply edits, color ramps, and backgrounds; Alexa coordinates real-time rendering, asset fetching, and auto-captioning. Use generative models to adapt clip length to current trends, suggest feature moments, and turn rough cuts into viewer-ready clips that hold attention and extend reach. This setup also positions the pipeline to handle future demand.

Performance and metrics: measure throughput across batches and scale up only when real results justify it. Track spikes in render times and adjust batch size accordingly; keep a log of decisions; make sure assets can be fetched quickly; optimize I/O; keep previews fast; and measure reach and viewer response.
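Adjusting batch size in response to render-time spikes can follow a simple additive-increase, multiplicative-decrease rule. This is a sketch under assumed thresholds: a render counts as a spike when it exceeds 1.5 times the median of earlier renders, which halves the batch; otherwise the batch grows by one, up to a cap.

```python
from statistics import median


def adjust_batch_size(render_times, batch_size, spike_factor=1.5, min_size=1, max_size=64):
    """Halve the batch after a render-time spike; otherwise grow it by one (AIMD)."""
    if len(render_times) < 3:
        return batch_size  # not enough history for a meaningful baseline
    baseline = median(render_times[:-1])
    latest = render_times[-1]
    if latest > spike_factor * baseline:
        return max(min_size, batch_size // 2)
    return min(max_size, batch_size + 1)
```

Using the median of earlier renders as the baseline keeps a single past outlier from masking a new spike.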

Future-proofing and governance: align templates with trends; keep editor-friendly file formats; let Alexa suggest edits and color variants; encourage creative experimentation by testing new ideas; export pipelines to common formats; and track performance to inform the next cycle.

Quality assurance: testing timing accuracy, visual continuity, and cross-device playback with Alexa devices

Begin with a concrete baseline: align cue points within ±50 ms across multiple devices, including Alexa-enabled speakers and Fire TV. Save the results in a detailed log and compare them against the published schedule.
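The ±50 ms baseline can be checked per device with a short script, assuming you have the expected cue times from the manifest and the measured cue times (in milliseconds) from each device. It reports the mean absolute error and the worst drift, and a device passes only if every cue lands within the tolerance; the device labels are illustrative.

```python
from statistics import mean


def drift_report(expected_ms, measured, tolerance_ms=50.0):
    """Compare each device's measured cue times against the manifest timestamps."""
    report = {}
    for device, times in measured.items():
        if len(times) != len(expected_ms):
            raise ValueError(f"{device}: expected {len(expected_ms)} cues, got {len(times)}")
        errors = [abs(m - e) for m, e in zip(times, expected_ms)]
        report[device] = {
            "mae_ms": mean(errors),
            "max_ms": max(errors),
            "pass": max(errors) <= tolerance_ms,
        }
    return report


# Hypothetical measurements for three cues on two devices.
report = drift_report(
    [0, 1000, 2000],
    {"echo_show": [10, 1020, 1990], "fire_tv": [0, 1080, 2000]},
)
```

Here `fire_tv` fails even though its mean error is small, because one cue drifts by 80 ms; judging on the worst cue rather than the average catches isolated sync glitches.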

- Timing accuracy
  - Define target triggers: each action or cut aligns with a meta cue in the track; store cue timestamps in a saved manifest.
  - Run automated checks across multiple devices (Echo family, Echo Show, Fire TV, mobile apps); use an autonomous assistant to simulate user actions and measure drift.
  - Compute the mean absolute error and set pass/fail thresholds; attach evidence files and flag discrepancies so they can be remediated.
- Visual continuity
  - Verify frame-by-frame alignment between cuts and effects; ensure resolution stays consistent across outputs and platforms.
  - Audit color grading, lighting, and motion so the mood stays realistic and matches the emotions the scene conveys.
  - Evaluate turning points and editing actions; confirm that music cues and volume levels stay synchronized with on-screen changes.
- Cross-device playback with Alexa devices
  - Test streaming on Echo devices, Echo Show, and Fire TV; confirm that playback starts, pauses, and resumes exactly when expected while preserving track alignment.
  - Validate voice commands and spoken responses that affect playback; ensure command latency stays below a defined threshold.
  - Assess the publishing quality of content featuring characters and specialized content types; confirm that generated assets retain resolution and clarity across a variety of screens.
- Reporting, publishing, and improvement loop
  - Feed saved results into a community-driven dashboard; summarize trends and actions, share them with audiences, and use them to guide publishing decisions.
  - Use an autonomous assistant to draft incident reports, route issues to specialized teams, and update schedules for regression runs.
  - Maintain a schedule of repeated test cycles; keep content variants to track resolution and open issues, and ensure a consistent experience across devices and screen types.

The Best AI Tools for Creating Engaging Video Cuts
