Begin by defining a single use case and securing explicit consent before any data handling.

In an educational setup, outline a single, valuable use case and establish privacy boundaries. Typically, the system serves as a chatbot that answers questions, explains concepts, and guides users through tasks, with responses that are accurate and verifiable. The plan maps directly to business goals, reaches a wider audience through onscreen prompts and overlay visuals, and relies on software that supports prompt-to-video workflows. This approach yields a tangible benefit, good user satisfaction, and a practical way to verify success; a photo-based identity check can be integrated, and a warning alert can trigger when sensitive data is requested. Functionality improves when the feature set aligns with real needs, matches user intent, and scales toward more complex scenarios.

Choose a lightweight overlay approach to display the AI persona onscreen, using a responsive chatbot backbone and software that supports audio, video, and text synthesis. Prioritize functionality that delivers natural speech, retains context, and supports prompt-to-video workflows. Test across devices to ensure consistent appearance and interaction, and plan for instant content updates to keep the experience educational and engaging.

Security note: The system should adhere to consent, data minimization, and transparent logging. For wider adoption, ensure data never leaves a user’s region without permission, and give users control to delete or export their data instantly. This matters for global markets such as forex, where compliance risk is high and onboarding requires clear disclosures. The setup should include a simple offline fallback for when the internet is unavailable, with a local cache that is encrypted and removable.

When designing the persona, give it a distinct name like Seth and train responses to mirror a consistent voice; this helps match user expectations and builds trust. The educational value compounds as users see the same reasoning pattern across sessions, delivering a reliable benefit aligned with broader business goals. Keep the workflow lean so updates can be deployed instantly, and collect feedback to refine prompts, assets, and finishing touches. The end result should be wider adoption, good retention, and a scalable path to chat-enabled experiences that correspond to real needs.

Define Your Persona, Use-Cases, and Key Metrics

Build a three-attribute persona: target segment, speaking style, reliability. Then identify four use-cases and assign a metric to each to quantify impact in seconds.

Persona design

- Audience: define the target segment (role, industry, company size) to align language, examples, and scenarios, so you can steadily produce relevant content.

- Tone and voice: establish a dynamic, human-like voice; map four tone options (concise, friendly, formal, empathetic) to different contexts so your outreach feels natural across sessions and screens.

- Channel, screen, and medium: default to screen-based chat interfaces; specify when to escalate to voice or other medium to preserve engagement across devices.

- Guardrails and trust: adopt trusted, warning-style safety checks; implement edge-case handling to protect users and brands.

- Creation and editing workflow: use a builder to assemble prompts and responses; include edit and enhance steps to keep content aligned with policy and brand guidelines.

- Assets library: maintain a reusable repository of prompts and responses; ensure consistency across those assets and any material created for campaigns.

- Data posture: tag inputs for privacy and consent; enable quick edits to adapt to evolving rules while maintaining a consistent voice.
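The persona attributes above can be captured in a small configuration object. The following is a minimal sketch, not a prescribed format: the `Persona` class, the context names (`faq`, `billing_dispute`, and so on), and the example segment are all hypothetical and chosen only to illustrate mapping the four tone options to contexts.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict


class Tone(Enum):
    CONCISE = "concise"
    FRIENDLY = "friendly"
    FORMAL = "formal"
    EMPATHETIC = "empathetic"


@dataclass
class Persona:
    """Hypothetical persona record: segment, speaking style, and tone mapping."""
    name: str
    target_segment: str
    default_tone: Tone
    tone_by_context: Dict[str, Tone] = field(default_factory=dict)

    def tone_for(self, context: str) -> Tone:
        # Fall back to the default tone when a context has no explicit mapping.
        return self.tone_by_context.get(context, self.default_tone)


# Example values are assumptions for illustration only.
seth = Persona(
    name="Seth",
    target_segment="operations managers at mid-sized companies",
    default_tone=Tone.FRIENDLY,
    tone_by_context={
        "faq": Tone.CONCISE,
        "onboarding": Tone.FRIENDLY,
        "contract_question": Tone.FORMAL,
        "billing_dispute": Tone.EMPATHETIC,
    },
)
```

Keeping the mapping in data rather than in prompt text makes it easier to review tone changes alongside other versioned assets.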

Use-Cases and Metrics

- Customer-support chatbot on screen to answer common questions; goal is rapid resolution and reduced friction, measured by seconds per interaction and engagement per session.

- Product tour and onboarding across the site; aim to increase completion rate and shorten time-to-value, tracked via clicks and time spent on each step.

- Sales outreach assistant for targeted campaigns; focus on higher-quality outreach, with metrics tied to click rate, engagement, and conversion signals.

- Internal training and knowledge companion for teams; emphasize created content usage, consistency, and adoption across departments.

- Impact: quantify changes in engagement and conversion, linking outputs to business goals and campaigns.

- Engagement: monitor the share of sessions that trigger a follow-up action, a proxy for resonance.

- Click: track click-throughs per prompt to judge prompt relevance and clarity.

- Seconds: measure average handling time; aim for steady improvement as prompts are refined.

- Consistency: score responses on tone and accuracy across times and channels to ensure a trusted experience.

- Created: count prompts, scripts, and conversation templates produced weekly to gauge production velocity and scalability.

- Outreach: quantify reach across multiple channels; ensure the builder supports multi-channel deployment and synchronized updates.

- Discovery: identify gaps in coverage; schedule discovery reviews to uncover those gaps and fill them.

- Human-like: benchmark user perception of realism; use user surveys to tune the medium and language used by the bot.

- Quality and safety: monitor for safe completions; apply warning-style checks to maintain trusted interactions.
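Several of the metrics above (seconds, click, engagement) reduce to simple aggregates over session logs. Here is a minimal sketch of that calculation; the `Session` fields are assumed names for whatever your logging actually records, not part of any specific analytics product.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Session:
    """Hypothetical per-session log record."""
    handling_seconds: float   # time from first message to resolution
    prompts_shown: int        # prompts displayed during the session
    prompt_clicks: int        # prompts the user clicked
    follow_up_action: bool    # whether the user took a follow-up action


def summarize(sessions: List[Session]) -> Dict[str, float]:
    """Aggregate the seconds, click, and engagement metrics."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    total_prompts = sum(s.prompts_shown for s in sessions)
    total_clicks = sum(s.prompt_clicks for s in sessions)
    return {
        "avg_handling_seconds": mean(s.handling_seconds for s in sessions),
        "click_through_rate": total_clicks / total_prompts if total_prompts else 0.0,
        "engagement_rate": sum(s.follow_up_action for s in sessions) / len(sessions),
    }
```

Running `summarize` on each week's sessions gives a trend line for handling time as prompts are refined.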

Collect, Prepare, and Label Voice and Visual Data for Training

Start by securing informed consent from participants and establishing a permissive license for their contributions. Design a data plan that covers audiences across demographics, so voices and onscreen appearances reflect a range of accents, looks, and environments. Offer participants the option to subscribe to project updates, and credit every contributor in a transparent credits record. Set opt-out provisions that allow withdrawal, and plan for how consent can be revised until the project concludes. This approach benefits the business while upholding ethical handling of data.

Voice data: capture 5 to 10 second clips per speaker across several sessions to reflect tempo, cadence, and emotion. Target 20 to 40 samples per person; use a minimum 16 kHz sample rate with 16-bit PCM; avoid clipping by normalizing peaks and documenting loudness ranges. Record environment noise levels and the devices used. Include samples only from participants who consented, and make sure every voice speaks clearly and sounds natural across both casual and more formal prompts.
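The recording targets above are easy to check automatically before a clip enters the dataset. The sketch below uses Python's standard `wave` module and assumes clips are stored as uncompressed WAV files; the function name `check_clip` and the message wording are illustrative.

```python
import wave
from typing import List

MIN_SAMPLE_RATE = 16_000        # Hz, the minimum stated above
REQUIRED_SAMPLE_WIDTH = 2       # bytes per sample, i.e. 16-bit PCM
MIN_SECONDS, MAX_SECONDS = 5.0, 10.0


def check_clip(path: str) -> List[str]:
    """Return a list of problems found in a WAV clip (empty if it passes)."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        width = wav.getsampwidth()
        seconds = wav.getnframes() / rate

    problems = []
    if rate < MIN_SAMPLE_RATE:
        problems.append(f"sample rate {rate} Hz is below {MIN_SAMPLE_RATE} Hz")
    if width != REQUIRED_SAMPLE_WIDTH:
        problems.append(f"sample width is {width * 8}-bit, expected 16-bit")
    if not MIN_SECONDS <= seconds <= MAX_SECONDS:
        problems.append(f"duration {seconds:.1f} s is outside 5 to 10 s")
    return problems
```

This only validates the container metadata; clipping and loudness checks would need to inspect the sample values themselves.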

Visual data: record onscreen appearances under three-point lighting, using multiple angles and varied wardrobe and backgrounds to simulate daily usage. Prefer 1080p or higher at 30 fps; ensure stable framing and proper exposure; label frames with resolution, framing, background, and lighting notes; keep the look consistent across devices. Use translation cues in captions when applicable and ensure visuals align with the audio content.

Data labeling workflow

Set up a labeling schema covering speaker_id, language, locale, emotion, lighting condition, background, wardrobe, camera angle, and licensing. Attach metadata such as sample_length, sample_rate, license, and credits. Use unique IDs for sources and record consent status and translation notes. Validate labels through intercoder reliability checks and resolve discrepancies until alignment is achieved. Maintain a centralized log to track revisions, approvals, and contributor credits. Be prepared to adjust the schema as features emerge, so the system can discover patterns and stay accurate.
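One way to make the schema and the reliability check concrete is shown below. This is a sketch under assumptions: the `ClipLabel` field names follow the list above but the exact types are guesses, and Cohen's kappa is used as one common intercoder agreement measure for two annotators; the text does not name a specific statistic.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ClipLabel:
    """Hypothetical label record for one audio or video sample."""
    source_id: str
    speaker_id: str
    language: str
    locale: str
    emotion: str
    lighting: str
    background: str
    wardrobe: str
    camera_angle: str
    license: str
    consent_given: bool
    sample_length_s: float
    sample_rate_hz: int
    credits: str = ""
    translation_notes: str = ""


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)
```

A low kappa on a field such as `emotion` suggests the label definitions need tightening before more data is annotated.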

Ethical and operational guardrails

Protect privacy by de-identifying data where feasible; restrict access to authorized teams; enforce retention limits; credit participants; ensure the data delivers value to the business while staying aligned with ethical norms. Avoid deceptive uses; allow withdrawal; manage licenses for background music or logos; ensure translations align across languages and subtitles are accurate for onscreen text. Maintain a changelog and audit trails for every modification. This framework supports strong generative assets for chatbot personas while preserving audience trust and contributor credit.

Pick Tools: Avatar Engine, Speech Synthesis, and Integration Stack

Recommendation: choose a modular stack with an Avatar Engine for rigged avatars and lip-sync, a Speech Synthesis service with SSML and multiple voices, and an Integration Layer that orchestrates assets, triggers, and export pipelines. Verify commercial licenses, API reliability, and predictable costs to support frequently updated demonstrations, educational outreach, and translation needs across teams. Plan a pacing that keeps the flow smooth, with a seamless handoff from scripting to stage. Build four core asset tracks: outfit variants, pose cards, hand-gesture cards, and metadata that guides stories. Use the luxor personas and Seth as demo cards to refine the craft, rough out the visuals, and stay aligned with audience needs. Keep asset sizes small and the export path lean for quick demos.

Avatar Engine, Hands-on Scripting, and Export Paths

Avatar Engine evaluation: check viseme coverage, lip-sync fidelity, rig quality, and export options such as GLTF/GLB or FBX. Favor engines with scripting bindings in JavaScript or Python and event hooks for turn changes, voice playback, and asset swaps. Confirm four avatars can run in parallel during demos while keeping a lean footprint through modular outfits and gesture cards. If a library like heygens exists, verify import flow and asset compatibility. Plan a clean hand-off from concept to demo and maintain a scratch-ready path to speed iterations.

Speech Synthesis, Localization, and Integration

Voice quality matters: pick voices that speak clearly with natural prosody, and tune rate, pitch, and pauses via SSML. Make sure translation needs are covered for captions and transcripts, and provide multiple voices for different stories. Export transcripts and captions as cards in the asset library, with a preferred workflow for downstream applications. The Integration Layer should expose endpoints for real-time prompts, telemetry, and export destinations. Keep the data path lean to minimize downloads and ensure seamless handoffs from audio to scene. Focus on educational demonstrations and stories for outreach, and use scripting to synchronize user turns with the lines spoken by avatars. Planning four outfits across scenes reduces asset churn and keeps the user experience smooth. Meeting these needs and aligning with your preferred innovations keeps you ahead.
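As an illustration of tuning rate, pitch, and pauses, the sketch below assembles an SSML string using the standard `<prosody>` and `<break>` elements. The helper name `build_ssml` and its defaults are assumptions, and the attribute values a given speech service accepts vary, so check your provider's documentation before relying on specific values.

```python
from typing import Iterable
from xml.sax.saxutils import escape, quoteattr


def build_ssml(sentences: Iterable[str], rate: str = "medium",
               pitch: str = "+0st", pause_ms: int = 300) -> str:
    """Wrap sentences in SSML with one prosody setting and fixed pauses."""
    pause = f'<break time="{int(pause_ms)}ms"/>'
    # Escape &, <, and > so user-supplied text cannot break the markup.
    body = pause.join(escape(sentence) for sentence in sentences)
    return (
        f"<speak><prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{body}</prosody></speak>"
    )


ssml = build_ssml(["Hi & welcome.", "Ready to start?"], rate="slow",
                  pitch="+2st", pause_ms=250)
```

The resulting string would be sent as the input to whichever synthesis endpoint the Integration Layer wraps.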

Prototype Interactions with Safety Filters and Content Rules


Apply a layered safety gate at the session input: route messages through a content rules engine, a sentiment guard, and a quick human-in-the-loop flag before rendering. Rendering occurs only after all checks pass, which avoids unsafe outputs. This keeps control costs predictable and supports rapid iteration during testing while preserving the user experience.
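The layered gate can be prototyped as a list of check functions that each return a reason when a message fails. The sketch below is illustrative only: the keyword lists stand in for a real rules engine and sentiment model, and every name in it (`content_rules`, `sentiment_guard`, `human_review_flag`, `respond`) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence

# A check returns a reason string when the message fails, otherwise None.
Check = Callable[[str], Optional[str]]

BLOCKED_TERMS = ("password", "social security number")  # placeholder rules
HOSTILE_TERMS = ("hate", "stupid")                       # placeholder sentiment cues
REVIEW_TERMS = ("diagnosis", "lawsuit")                  # placeholder review triggers


def _first_match(message: str, terms: Sequence[str]) -> Optional[str]:
    lowered = message.lower()
    return next((term for term in terms if term in lowered), None)


def content_rules(message: str) -> Optional[str]:
    term = _first_match(message, BLOCKED_TERMS)
    return f"blocked term: {term}" if term else None


def sentiment_guard(message: str) -> Optional[str]:
    term = _first_match(message, HOSTILE_TERMS)
    return f"hostile tone: {term}" if term else None


def human_review_flag(message: str) -> Optional[str]:
    term = _first_match(message, REVIEW_TERMS)
    return f"needs human review: {term}" if term else None


@dataclass
class Decision:
    allowed: bool
    reasons: List[str] = field(default_factory=list)


def safety_gate(message: str, checks: Sequence[Check]) -> Decision:
    """Run every check and allow the message only if none reports a reason."""
    reasons = [r for check in checks if (r := check(message)) is not None]
    return Decision(allowed=not reasons, reasons=reasons)


def respond(message: str, render: Callable[[str], str]) -> str:
    """Render a reply only after the gate passes."""
    decision = safety_gate(message, (content_rules, sentiment_guard, human_review_flag))
    if not decision.allowed:
        return "Sorry, I can't help with that request."
    return render(message)
```

Collecting all reasons instead of stopping at the first failure gives the audit log a fuller picture of why a message was held back.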

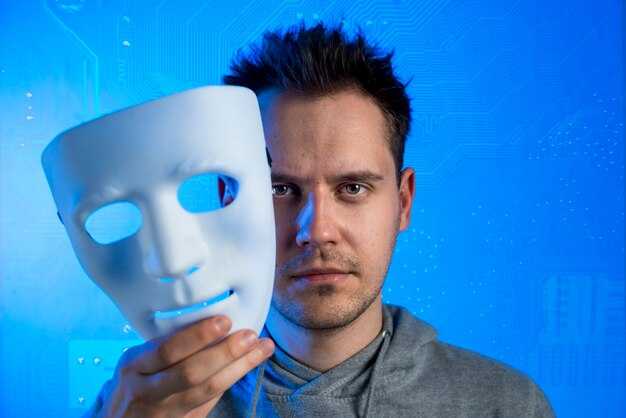

Anchor decisions in formal training standards: ensure examples align with pediatric guidelines and that messages avoid disallowed topics; especially enforce moderation for casual chatbot interactions and avatar persona disclosures. Note: Being transparent about model status reduces ambiguity for casual users during production.

Disallow cloning of real persons: privacy and safety rely on explicit limits on identity and ownership; logs track prompt origins and actions to support accountability and credit to the safety team.

During planning, cap the acceptable cost of risk and set aside a budget for risk mitigation; define a target rate for unsafe outputs and track incidents in a dashboard to adjust policies in production.

In testing, simulate edge cases with mock prompts that resemble abuse, misinformation, or privacy threats; run rapid cycles of prompt editing to keep output quality high; use synthetic data to broaden coverage and gain insights for improving the user experience.

In demos meant for casual audiences, manage expectations with clear boundaries: include on-screen notices for prototype status; ensure sound cues indicate generated content; maintain full provenance of outputs and decisions; verify attire cues and avatar appearance to avoid misrepresentation; and align budget with risk controls in production. If you publish a demo video on YouTube, keep it controlled, label it as a prototype, and disclose its limitations clearly. Attention to user education remains essential during demos.

Safety Controls and Content Filtering

Establish layered filters: linguistic, contextual, and persona constraints; require dubious outputs to be edited before sending; implement policy checks and store a log trail for audits; apply pediatric safeguards and limit medical advice for minors; use training routines to refresh the filter models.

Measurement, Testing, and Production Handoff

Track metrics: false negatives, response time, and user reports; run weekly testing sprints; confirm full production readiness by validating with a subset of users and collecting insights; give credit where due and maintain an incident log for each tweak.
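False negatives and response time can be computed from a labeled test run. The sketch below assumes each result is recorded as a tuple of (was the prompt actually unsafe, did the filter block it, response time in milliseconds); that record layout and the function name `filter_report` are assumptions for illustration.

```python
from statistics import median
from typing import Dict, Iterable, Tuple

Result = Tuple[bool, bool, float]  # (is_unsafe, was_blocked, response_ms)


def filter_report(results: Iterable[Result]) -> Dict[str, float]:
    """Summarize filter misses and latency for one test sprint."""
    results = list(results)
    if not results:
        raise ValueError("no test results to report")
    unsafe = [r for r in results if r[0]]
    # A false negative is an unsafe prompt that the filter let through.
    missed = sum(1 for _, was_blocked, _ in unsafe if not was_blocked)
    return {
        "false_negative_rate": missed / len(unsafe) if unsafe else 0.0,
        "median_response_ms": median(r[2] for r in results),
    }
```

Comparing the false negative rate between weekly sprints shows whether filter updates are actually closing gaps.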

Set Up Ongoing Updates, Maintenance, and Version Control Schedule

Initiate a monthly update cycle led by a dedicated specialist who reports to the founder; this ensures professional-looking updates with clear accountability.

Maintain a ground-truth revision log for assets, scripts, configurations, and models, storing everything in a centralized repository to enable controlled rollbacks.

Steps to implement: 1) collect ground recordings and green renders to verify outputs; 2) tag each change with a descriptive note; 3) run a generative, conversational test suite; 4) document outcomes and update the skills matrix.

Define a release-gate process: green signals on passes, a formal sign-off by the specialist and a quick risk assessment before propagating to mobile and production environments.
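The release gate can be expressed as a function that lists whatever still blocks a release. This is a minimal sketch: the `ReleaseCandidate` fields and the three-level risk scale are assumptions, since the text does not specify how the risk assessment is scored.

```python
from dataclasses import dataclass
from typing import List

RISK_ORDER = ("low", "medium", "high")  # hypothetical risk scale


@dataclass
class ReleaseCandidate:
    version: str
    tests_passed: bool            # the "green signal" from the test suite
    specialist_signed_off: bool   # formal sign-off by the specialist
    risk_level: str               # result of the quick risk assessment


def release_blockers(candidate: ReleaseCandidate, max_risk: str = "medium") -> List[str]:
    """Return the reasons a candidate cannot ship (empty list means go)."""
    blockers = []
    if not candidate.tests_passed:
        blockers.append("test suite did not pass")
    if not candidate.specialist_signed_off:
        blockers.append("specialist sign-off missing")
    # An unknown risk level raises ValueError rather than passing silently.
    if RISK_ORDER.index(candidate.risk_level) > RISK_ORDER.index(max_risk):
        blockers.append(f"risk level {candidate.risk_level} exceeds {max_risk}")
    return blockers
```

Propagation to mobile and production would proceed only when `release_blockers` returns an empty list.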

Plan maintenance windows: monthly checks of recordings, renders, and script integrity; perform small, frequent tweaks instead of large rewrites to keep movements and human-like cues coherent and laser-focused.

Testing and validation: run micro-tests on movements and human-like cues, verify answer accuracy, and validate conversational coherence across channels; ensure the process does not introduce latency.

Data governance: inform stakeholders of changes, use only approved datasets, and ensure security and privacy on mobile devices and across access paths.

Metrics to track: most critical signals include latency of answering, realism of renders, fidelity of script, and consistency of ground-truth references.

Quality gate: maintain a laser-focused monthly review cadence that checks for drift in movements, emotional tone, and novelty of responses; filter out any misalignments.